ASCII: Converting Symbols to Binary

The American Standard Code for Information Interchange (ASCII) was an early standardized encoding system for text.

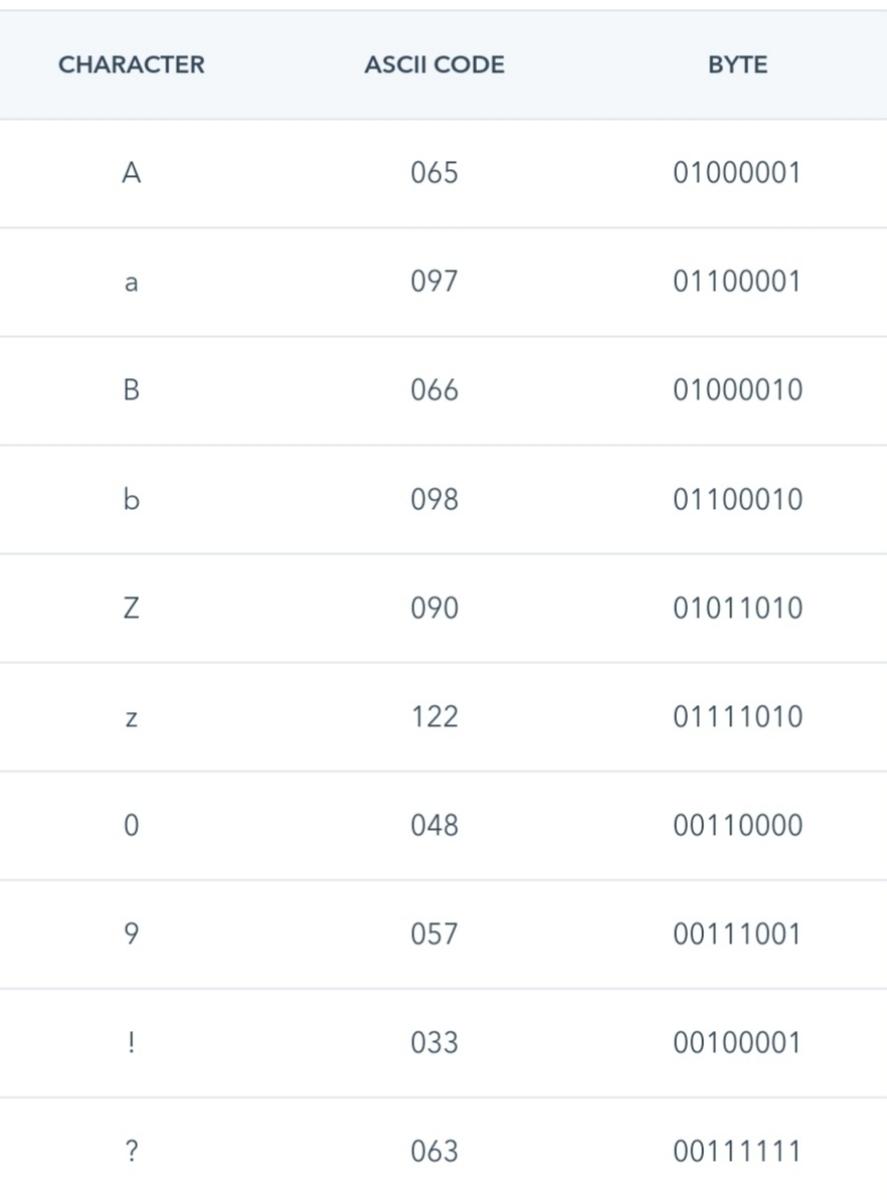

ASCII’s character set includes every upper-case and lower-case letter in the Latin alphabet, every digit from 0 to 9, and some symbols (like /, !, and ?). It assigns each of these characters a unique numeric code from 0 to 127 (at most three decimal digits), and each code fits in a single byte.
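As a rough illustration of that mapping, the sketch below looks up the numeric code for a few characters and shows the bit pattern a byte would hold for each. The function name `to_ascii_table` is made up for this example; `ord` and `format` are Python built-ins.

```python
def to_ascii_table(text):
    """Return (character, decimal code, 8-bit binary string) for each character."""
    rows = []
    for ch in text:
        code = ord(ch)  # numeric code of the character
        if code > 127:
            # Anything above 127 is outside the ASCII range.
            raise ValueError(f"{ch!r} is not an ASCII character")
        # Pad to 8 bits, the size of one byte; the leading bit is always 0 for ASCII.
        rows.append((ch, code, format(code, "08b")))
    return rows


for ch, code, bits in to_ascii_table("A/!?"):
    print(ch, code, bits)
# A 65 01000001
# / 47 00101111
# ! 33 00100001
# ? 63 00111111
```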

But ASCII is limited. It is a 7-bit code, so it defines only 128 characters, even though an 8-bit byte can hold 256 different values. When ASCII was first published in 1963, this was okay, since developers needed only 128 codes to represent all the English characters and symbols they used.
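The arithmetic behind these limits is small enough to check directly: 7 bits give 128 possible codes, while a full 8-bit byte gives 256. The sketch below also shows one way to test whether a string stays inside the ASCII range; the helper name `fits_in_ascii` is an assumption for this example, while `str.encode("ascii")` is standard Python.

```python
ASCII_CODES = 2 ** 7   # 7 bits -> 128 possible codes (0..127)
BYTE_VALUES = 2 ** 8   # 8 bits -> 256 possible byte values (0..255)


def fits_in_ascii(text):
    """Return True if every character in text has an ASCII code."""
    try:
        text.encode("ascii")  # raises UnicodeEncodeError for non-ASCII characters
        return True
    except UnicodeEncodeError:
        return False


print(ASCII_CODES, BYTE_VALUES)   # 128 256
print(fits_in_ascii("Hello!"))    # True
print(fits_in_ascii("café"))      # False
```

English text passes, but a single accented letter is enough to fall outside the range, which is the limitation described above.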
