However, LLMs also have limitations. They can generate incorrect or biased information, particularly when the data they were trained on is inaccurate or biased. They also do not "understand" language or concepts the way humans do; they are very good at predicting text patterns.

Similar ideas

The thinking problem

Our brains must make sense of the confusing world around us by processing a never-ending stream of information. Ideally, our brains would analyze everything thoroughly. However, they cannot, because it is too impractical.

- Thinking takes time, and our decision...

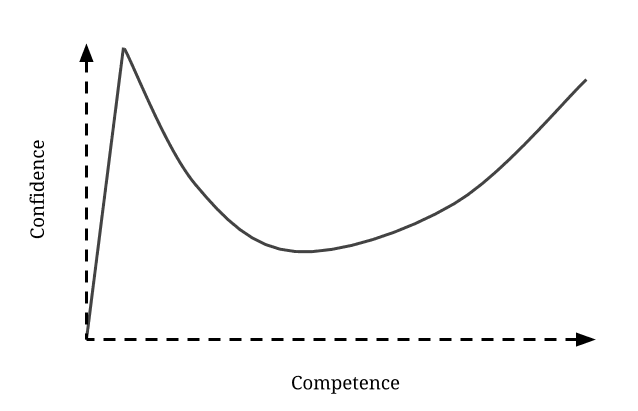

The Blindspot Of Ignorance And Incompetence

Humans are not very good at self-evaluation and may be unaware of how ignorant they are. This psychological deficiency is known as the Dunning-Kruger Effect, where an illusory superiority clouds the individual and forms a cognitive bias which makes them hold many overly ...

Tools To Manage The Cognitive Load

- Grouping or chunking of various pieces of information into different sections, making them easier to retrieve and remember.

- Making mind maps or process maps, and also thinking in maps making constructive associations and flow-charts.

- Clear...
