12 Risks and Dangers of Artificial Intelligence (AI)

Curated from: builtin.com


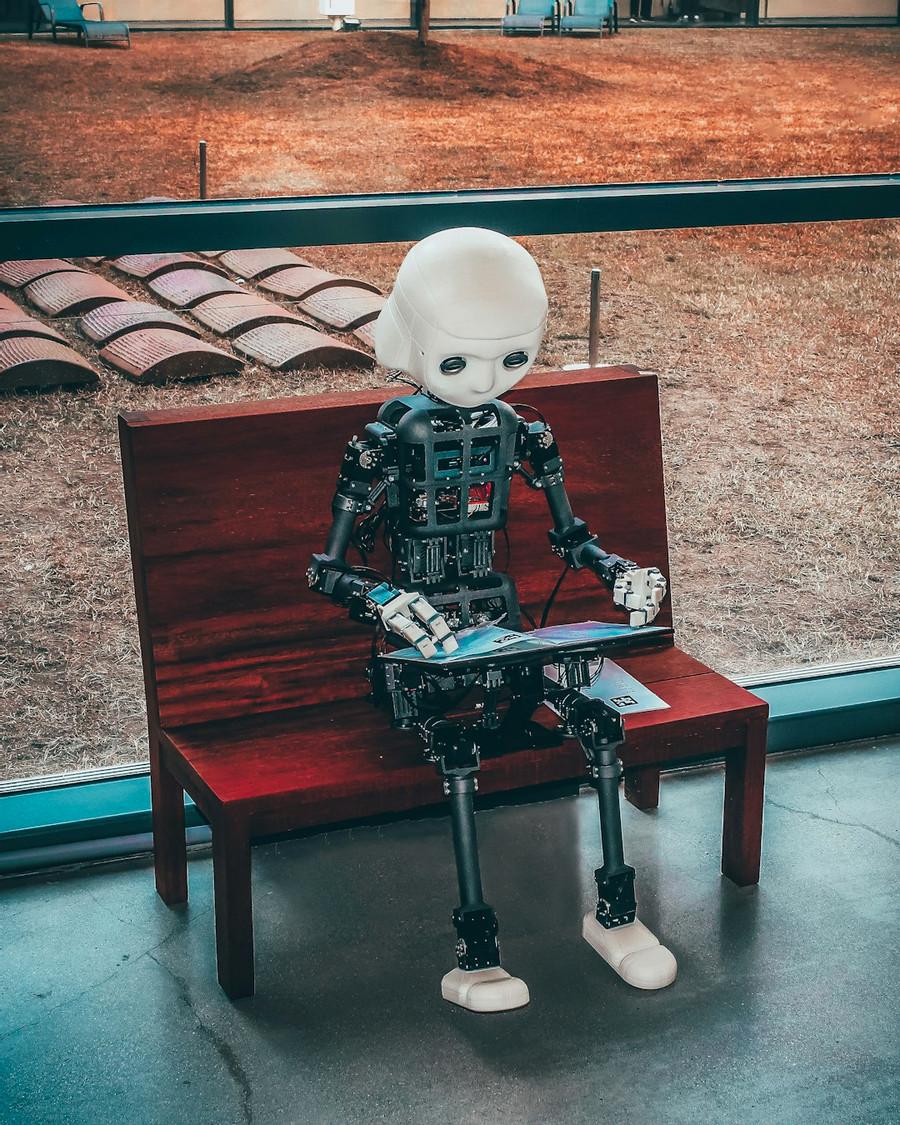

AI - Good Or Bad

As AI grows more sophisticated and widespread, the voices warning against the potential dangers of artificial intelligence grow louder.

“These things could get more intelligent than us and could decide to take over, and we need to worry now about how we prevent that happening,” said Geoffrey Hinton, known as the “Godfather of AI” for his foundational work on machine learning and neural network algorithms. In 2023, Hinton left his position at Google so that he could “talk about the dangers of AI,” noting that a part of him even regrets his life’s work.

Tesla and SpaceX founder Elon Musk, along with over 1,000 other tech leaders, urged in a 2023 open letter that large AI experiments be paused, warning that the technology can “pose profound risks to society and humanity.”

Risks of Artificial Intelligence

- Automation-spurred job loss

- Deepfakes

- Privacy violations

- Algorithmic bias caused by bad data

- Socioeconomic inequality

- Market volatility

- Weapons automatization

- Uncontrollable self-aware AI

Whether it’s the increasing automation of certain jobs, gender and racially biased algorithms or autonomous weapons that operate without human oversight (to name just a few), unease abounds on a number of fronts. And we’re still in the very early stages of what AI is really capable of.

Is AI Dangerous?

The tech community has long debated the threats posed by artificial intelligence. Automation of jobs, the spread of fake news and a dangerous arms race of AI-powered weaponry have been mentioned as some of the biggest dangers posed by AI.

1. Lack of AI Transparency and Explainability

AI and deep learning models can be difficult to understand, even for those who work directly with the technology. This makes it hard to tell how and why an AI system reaches its conclusions, what data its algorithms use, or why they may make biased or unsafe decisions. These concerns have given rise to explainable AI, but transparent AI systems are still a long way from becoming common practice.

2. Job Losses Due to AI Automation

AI-powered job automation is a pressing concern as the technology is adopted in industries like marketing, manufacturing and healthcare. According to McKinsey, by 2030 tasks that account for up to 30 percent of hours currently worked in the U.S. economy could be automated, with Black and Hispanic employees left especially vulnerable to the change. Goldman Sachs even states that 300 million full-time jobs could be lost to AI automation.

“The reason we have a low unemployment rate, which doesn’t actually capture people that aren’t looking for work, is largely that lower-wage service sector jobs have been pretty robustly created by this economy,” futurist Martin Ford told Built In. With AI on the rise, though, “I don’t think that’s going to continue.”

As AI robots become smarter and more dexterous, the same tasks will require fewer humans. And while AI is estimated to create 97 million new jobs by 2025, many employees won’t have the skills needed for these technical roles and could get left behind if companies don’t upskill their workforces.

“If you’re flipping burgers at McDonald’s and more automation comes in, is one of these new jobs going to be a good match for you?” Ford said. “Or is it likely that the new job requires lots of education or training or maybe even intrinsic talents — really strong interpersonal skills or creativity — that you might not have? Because those are the things that, at least so far, computers are not very good at.”

Even professions that require graduate degrees and additional post-college training aren’t immune to AI displacement.

3. Social Manipulation Through AI Algorithms

Social manipulation is another danger of artificial intelligence. This fear has become a reality as politicians rely on social media platforms to promote their viewpoints; one example is Ferdinand Marcos, Jr., who wielded a TikTok troll army to capture the votes of younger Filipinos during the Philippines’ 2022 election.

TikTok, just one example of a social media platform that relies on AI algorithms, fills a user’s feed with content related to media they’ve previously viewed on the platform.

Criticism of the app targets this process and the algorithm’s failure to filter out harmful and inaccurate content, raising concerns over TikTok’s ability to protect its users from misleading information.

Online media and news have become even murkier in light of AI-generated images and videos, AI voice changers and deepfakes infiltrating political and social spheres. These technologies make it easy to create realistic photos, videos and audio clips, or to replace the image of one figure with another in an existing picture or video.

4. Social Surveillance With AI Technology

Beyond AI’s more existential threat, Ford is focused on the ways AI will adversely affect privacy and security. A prime example is China’s use of facial recognition technology in offices, schools and other venues. Besides tracking a person’s movements, the Chinese government may be able to gather enough data to monitor a person’s activities, relationships and political views.

Another example is U.S. police departments embracing predictive policing algorithms to anticipate where crimes will occur.

The problem is that these algorithms are influenced by arrest rates, which disproportionately impact Black communities. Police departments then double down on these communities, leading to over-policing and raising questions over whether self-proclaimed democracies can resist turning AI into an authoritarian weapon.

“Authoritarian regimes use or are going to use it,” Ford said. “The question is, How much does it invade Western countries, democracies, and what constraints do we put on it?”
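To make the predictive policing feedback loop more concrete, here is a deliberately simplified Python sketch. It is not how any real predictive policing product works. The greedy rule of sending every patrol to the single top "hotspot," the function name `simulate_hotspot_policing` and all of the numbers are hypothetical assumptions chosen for illustration. Two neighborhoods have identical true crime rates, but because new arrests are only recorded where officers patrol, a small initial gap in recorded arrests keeps pulling patrols toward the same place.

```python
from typing import List


def simulate_hotspot_policing(
    initial_arrests: List[float],
    true_crime_rate: List[float],
    days: int,
    patrols_per_day: int,
) -> List[float]:
    """Toy model of a predictive-policing feedback loop (hypothetical).

    Each day the "algorithm" predicts the hotspot purely from recorded
    arrests and sends every patrol there. Crime is only recorded where
    officers are present, so the unpatrolled neighborhood's record
    never changes, whatever its true crime rate is.
    """
    recorded = list(initial_arrests)
    for _ in range(days):
        # Predict the hotspot from historical arrest data alone.
        hotspot = max(range(len(recorded)), key=lambda i: recorded[i])
        # New arrests are recorded only in the patrolled neighborhood.
        recorded[hotspot] += true_crime_rate[hotspot] * patrols_per_day
    return recorded


if __name__ == "__main__":
    # Two neighborhoods with the SAME underlying crime rate, but the
    # first starts with slightly more recorded arrests (12 vs. 10).
    result = simulate_hotspot_policing(
        initial_arrests=[12, 10],
        true_crime_rate=[0.5, 0.5],
        days=30,
        patrols_per_day=10,
    )
    share = result[0] / sum(result)
    print(result, f"{share:.0%}")  # prints: [162.0, 10] 94%
```

After 30 simulated days the first neighborhood's share of recorded arrests climbs from about 55 percent to 94 percent, even though both neighborhoods have the same true crime rate. Real systems are far more complex, but the toy model captures the concern in the text: when an algorithm learns from data that its own decisions generate, it can end up confirming its earlier predictions rather than correcting them.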

5. Lack of Data Privacy Using AI Tools

AI systems often collect personal data to customize user experiences or to help train the AI models you’re using (especially if the AI tool is free). Data given to an AI system may not even be secure from other users: one bug in ChatGPT in 2023 “allowed some users to see titles from another active user’s chat history.” While some U.S. laws protect personal information in certain cases, there is no explicit federal law that protects citizens from data privacy harm caused by AI.

6. Biases Due to AI

Various forms of AI bias are detrimental, too. Speaking to the New York Times, Princeton computer science professor Olga Russakovsky said AI bias goes well beyond gender and race. In addition to data and algorithmic bias (the latter of which can “amplify” the former), AI is developed by humans, and humans are inherently biased.

“A.I. researchers are primarily people who are male, who come from certain racial demographics, who grew up in high socioeconomic areas, primarily people without disabilities,” Russakovsky said. “We’re a fairly homogeneous population, so it’s a challenge to think broadly.”

The limited experiences of AI creators may explain why speech-recognition AI often fails to understand certain dialects and accents, or why companies fail to consider the consequences of a chatbot impersonating notorious figures in human history. Developers and businesses should exercise greater care to avoid recreating powerful biases and prejudices that put minority populations at risk.

IDEAS CURATED BY

Hi! I'm Anu, and I am in love with reading, writing and being creative. This is a space for me to do all that and more and share it with the world. The idea of spreading love, peace and happiness around keeps me going ♥️

CURATOR'S NOTE

With AI getting a new boost every year, the downsides of AI aren't being spoken of enough. Do check out the complete article to read more about the dangers of AI.
