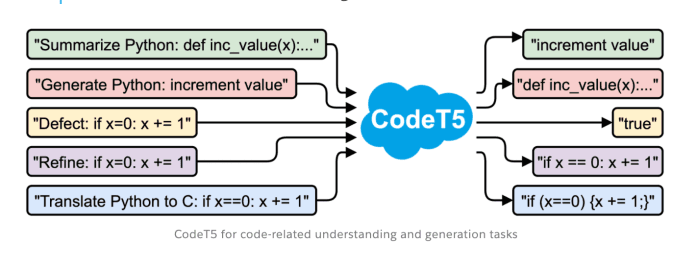

The Salesforce team has created and open-sourced a new identifier-aware unified pre-trained encoder-decoder model called CodeT5 . So far, they have demonstrated state-of-the-art results in multiple code-related downstream tasks such as understanding and generation across various directions, including PL to NL, NL to PL, and from one programming language to another.

CodeT5 is built on a similar architecture to Google’s T5 framework. It reframes text-to-text, where the input and output data are always strings of texts. This allows any task to be applied by this uninformed model.

4

14 reads

The idea is part of this collection:

Learn more about artificialintelligence with this collection

Find out the challenges it poses

Learn about the potential impact on society

Understanding the concept of Metaverse

Related collections

Read & Learn

20x Faster

without

deepstash

with

deepstash

with

deepstash

Personalized microlearning

—

100+ Learning Journeys

—

Access to 200,000+ ideas

—

Access to the mobile app

—

Unlimited idea saving

—

—

Unlimited history

—

—

Unlimited listening to ideas

—

—

Downloading & offline access

—

—

Supercharge your mind with one idea per day

Enter your email and spend 1 minute every day to learn something new.

I agree to receive email updates