The CodeT5 research team trained the model on over 8.35 million examples, including user-written comments from open-source GitHub repositories. Training the largest and most capable version of CodeT5, which has 220 million parameters, took 12 days on a cluster of 16 Nvidia A100 GPUs with 40 GB of memory each.
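
To put the training figures in perspective, the quoted numbers can be turned into a rough compute estimate. The sketch below is a back-of-the-envelope calculation only: it assumes all 16 GPUs were busy for the full 12 days, which the source does not state, and the helper name `gpu_hours` is purely illustrative.

```python
# Rough training-compute estimate from the figures quoted above.
# Assumption (not stated in the source): all 16 GPUs ran for the full 12 days.

def gpu_hours(num_gpus: int, days: float) -> float:
    """Return total GPU-hours for `num_gpus` devices running for `days` days."""
    return num_gpus * days * 24

NUM_GPUS = 16        # Nvidia A100, 40 GB each
TRAINING_DAYS = 12   # reported training time for the 220M-parameter model

total_gpu_days = NUM_GPUS * TRAINING_DAYS
total_gpu_hours = gpu_hours(NUM_GPUS, TRAINING_DAYS)

print(f"{total_gpu_days} GPU-days, {total_gpu_hours:.0f} GPU-hours")
# prints "192 GPU-days, 4608 GPU-hours"
```

Under that assumption, the run comes to roughly 192 GPU-days, or about 4,600 GPU-hours.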

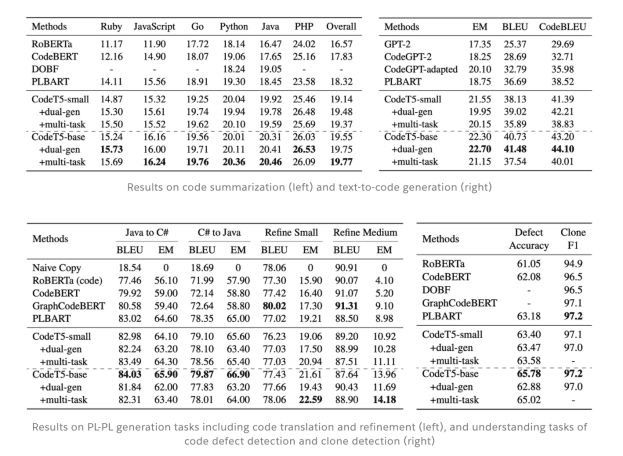

CodeT5 achieves state-of-the-art (SOTA) performance on fourteen subtasks in the CodeXGLUE code intelligence benchmark [3], as shown in the following tables.
