Performance

- Fast full-text search - Elasticsearch is built on top of Apache Lucene, a search library that answers full-text queries from inverted indexes.

- Near real-time indexing - Newly indexed documents typically become searchable within about 1 second, the default index refresh interval.

- High performance and fault tolerance - Each index is split into shards, which are distributed and replicated across servers. This lets Elasticsearch process large volumes of data in parallel and stay available when hardware fails.
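To make the sharding and replication point concrete, here is a toy Python model. It is not Elasticsearch's actual routing or allocation code. In simplified form, Elasticsearch picks a document's primary shard by hashing its `_routing` value (the document `_id` by default) and taking the result modulo the number of primary shards, and it never allocates a replica on the same node as its primary. The sketch assumes `zlib.crc32` as a stand-in for the Murmur3 hash Elasticsearch uses, a simple round-robin placement, and hypothetical helpers `shard_for`, `place_copies` and `surviving_shards`.

```python
import zlib


def shard_for(doc_id: str, num_primary_shards: int) -> int:
    """Route a document to a primary shard: hash(routing) % num_primaries.

    Toy stand-in: Elasticsearch hashes _routing with Murmur3, not crc32.
    """
    return zlib.crc32(doc_id.encode("utf-8")) % num_primary_shards


def place_copies(num_primary_shards: int, num_replicas: int,
                 nodes: list[str]) -> dict[str, list[str]]:
    """Assign each shard's primary and replicas to distinct nodes, round-robin.

    copies[0] holds the primary; the rest are replicas. Simplification: this
    raises if there are too few nodes, whereas Elasticsearch would leave the
    extra replicas unassigned.
    """
    if num_replicas + 1 > len(nodes):
        raise ValueError("need at least num_replicas + 1 nodes")
    placement: dict[str, list[str]] = {}
    for shard in range(num_primary_shards):
        # Consecutive nodes for each copy, so no two copies share a node.
        placement[f"shard-{shard}"] = [
            nodes[(shard + copy) % len(nodes)] for copy in range(num_replicas + 1)
        ]
    return placement


def surviving_shards(placement: dict[str, list[str]],
                     failed_node: str) -> dict[str, list[str]]:
    """Drop every copy held by a failed node and return what is left."""
    return {
        shard: [node for node in copies if node != failed_node]
        for shard, copies in placement.items()
    }


if __name__ == "__main__":
    nodes = ["node-a", "node-b", "node-c"]
    placement = place_copies(num_primary_shards=3, num_replicas=1, nodes=nodes)
    print(placement)
    after = surviving_shards(placement, "node-b")
    # With one replica per shard, every shard keeps at least one copy.
    print(all(after.values()))
    print(shard_for("doc-42", 3))
```

With three nodes and one replica, each shard's two copies sit on different nodes, so losing `node-b` still leaves every shard readable. In a real cluster Elasticsearch would then promote a surviving replica to primary and try to rebuild the missing copies on the remaining nodes.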

11

66 reads

CURATED FROM

IDEAS CURATED BY

Alt account of @ocp. I use it to stash ideas about software engineering

The idea is part of this collection:

Learn more about computerscience with this collection

Understanding machine learning models

Improving data analysis and decision-making

How Google uses logic in machine learning

Related collections

Similar ideas to Performance

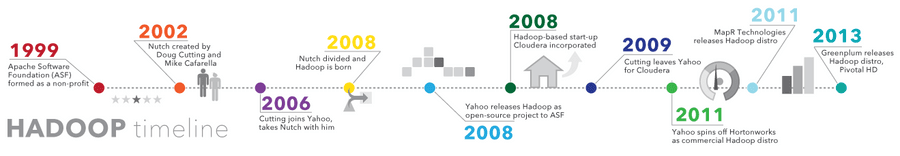

Big data Hadoop

- Ability to store and process huge amounts of any kind of data, quickly. With data volumes and varieties constantly increasing, especially from social media and the Internet of Things (IoT) , th...

Read & Learn

20x Faster

without

deepstash

with

deepstash

with

deepstash

Personalized microlearning

—

100+ Learning Journeys

—

Access to 200,000+ ideas

—

Access to the mobile app

—

Unlimited idea saving

—

—

Unlimited history

—

—

Unlimited listening to ideas

—

—

Downloading & offline access

—

—

Supercharge your mind with one idea per day

Enter your email and spend 1 minute every day to learn something new.

I agree to receive email updates