Fixing Multicollinearity

To fix multicollinearity:

- If the dataset is small, we can drop one of the correlated independent variables, or combine them into a single variable (e.g., the average of the two variables).

- If the dataset is large, we can use Ridge or Lasso regression.
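The two kinds of fix listed above can be sketched on a toy example. This is a minimal illustration under stated assumptions, not a production implementation: the data are hand-made, both predictors are already centered (so no intercept is fitted), and the helper names `simple_slope` and `ridge_two_predictors` are hypothetical. Only Ridge is shown; Lasso has no closed-form solution and is omitted here.

```python
def simple_slope(x, y):
    """Least-squares slope for one centered predictor (no intercept)."""
    return sum(a * b for a, b in zip(x, y)) / sum(a * a for a in x)


def ridge_two_predictors(x1, x2, y, alpha):
    """Ridge coefficients for two centered predictors (no intercept)."""
    # Entries of X^T X and X^T y for the two-column design matrix.
    s11 = sum(a * a for a in x1)
    s22 = sum(b * b for b in x2)
    s12 = sum(a * b for a, b in zip(x1, x2))
    t1 = sum(a * c for a, c in zip(x1, y))
    t2 = sum(b * c for b, c in zip(x2, y))
    # Solve (X^T X + alpha * I) beta = X^T y with Cramer's rule.
    a11, a22 = s11 + alpha, s22 + alpha
    det = a11 * a22 - s12 * s12
    b1 = (t1 * a22 - s12 * t2) / det
    b2 = (a11 * t2 - s12 * t1) / det
    return b1, b2


# Toy data (an assumption for illustration): x2 duplicates x1,
# so the two predictors are perfectly collinear.
x1 = [-2.0, -1.0, 0.0, 1.0, 2.0]
x2 = list(x1)
y = [2.0 * a for a in x1]

# Fix 1: merge the two predictors into their average and fit one slope.
x_avg = [(a + b) / 2 for a, b in zip(x1, x2)]
slope_avg = simple_slope(x_avg, y)

# Fix 2: ridge regression keeps both predictors but shrinks their coefficients.
b1, b2 = ridge_two_predictors(x1, x2, y, alpha=1.0)

print(slope_avg, b1, b2)
```

With `alpha=0.0` the same function reduces to ordinary least squares, and on this data the determinant is zero, so the division fails. That is the numerical face of perfect multicollinearity. With a positive `alpha` the system becomes solvable and the shared effect is split evenly between the two duplicated predictors.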


These are some of the assumptions to consider when applying the ordinary least squares method and performing regression analysis.

Similar ideas to Fixing Multicollinearity

Machine Learning Explained

Machine Learning is the process of letting your machine use the data to learn the relationship between predictor variables and the target variable. It is one of the first steps toward becoming a data scientist.

There are two kinds of machine learning: supervised and unsupervised learning...

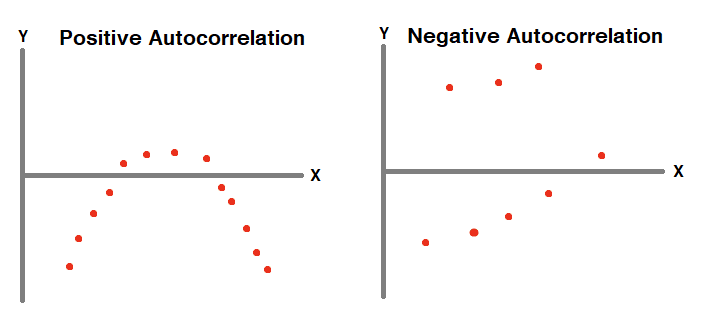

Autocorrelation Detection

To detect autocorrelation:

- Plot the residuals in observation order and check for patterns, or

- Use the Durbin-Watson test.
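As a rough sketch of the second option, the Durbin-Watson statistic can be computed directly from a list of regression residuals. The function name `durbin_watson` and the two toy residual series below are assumptions made for illustration. The statistic lies between 0 and 4: values near 2 suggest no first-order autocorrelation, values toward 0 suggest positive autocorrelation, and values toward 4 suggest negative autocorrelation.

```python
def durbin_watson(residuals):
    """Sum of squared successive differences divided by the sum of squares."""
    num = sum(
        (residuals[i] - residuals[i - 1]) ** 2
        for i in range(1, len(residuals))
    )
    den = sum(e * e for e in residuals)
    return num / den


# Toy residuals (assumed for illustration).
# Long runs of the same sign: positive autocorrelation.
trending = [1.0, 1.0, 1.0, -1.0, -1.0, -1.0]
# Sign flips at every step: negative autocorrelation.
alternating = [1.0, -1.0, 1.0, -1.0, 1.0, -1.0]

dw_trending = durbin_watson(trending)
dw_alternating = durbin_watson(alternating)

print(dw_trending, dw_alternating)
```

The first value falls well below 2 and the second well above it, which matches the patterns one would see by plotting the two series.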

There is no simple remedy for autocorrelation within ordinary linear regression. Instead of linear regression, we can use models built for serially correlated data:

- Autoregressive models.

- Moving average models.
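To make the first alternative concrete, here is a minimal sketch of a first-order autoregressive model, AR(1), in which each value is predicted from the one before it. The helper `fit_ar1` is hypothetical, the model has no intercept, and the toy series is noise-free, so this only illustrates the idea rather than a full time-series workflow.

```python
def fit_ar1(series):
    """Least-squares estimate of phi in y[t] = phi * y[t-1] + error."""
    prev = series[:-1]
    curr = series[1:]
    return sum(p * c for p, c in zip(prev, curr)) / sum(p * p for p in prev)


# Toy series (assumed): each value is exactly half of the previous one.
series = [8.0, 4.0, 2.0, 1.0, 0.5]

phi = fit_ar1(series)
forecast = phi * series[-1]  # one-step-ahead forecast

print(phi, forecast)
```

Real data would include noise, and usually an intercept and a check that the estimated coefficient lies between -1 and 1.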

What is a Variable?

A variable is an element, feature, or factor that can be measured or quantified. It represents a concept that can vary and is essential for data collection and analysis in research.
