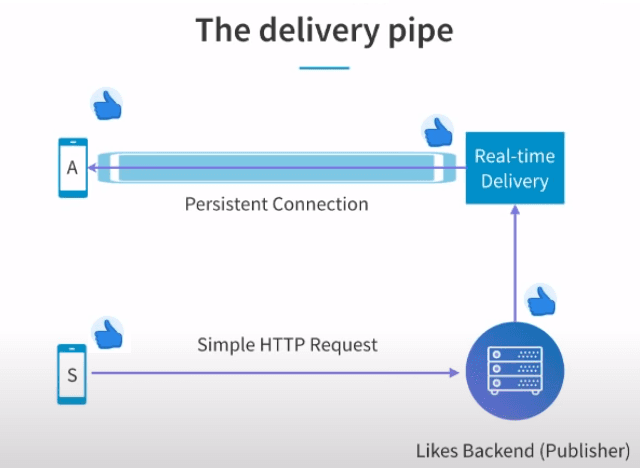

Challenge 1: The Delivery Pipe

- User devices have a persistent connection to the Realtime Platform servers.

- The servers use server-sent events (SSE) to stream data to the device over this connection as soon as it is available; the client receives it through the EventSource interface.

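The persistent connection is a regular HTTP connection that the server does not close after responding. It resembles an HTTP long poll, except that the server keeps streaming events on the same open response instead of ending it after the first message.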
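To make the wire format concrete, here is a minimal sketch of how a server might serialize one event for a `text/event-stream` response. The `PlatformEvent` shape and the `formatSseEvent` helper are hypothetical names chosen for illustration; they are not taken from the Realtime Platform itself.

```typescript
// Hypothetical event shape, for illustration only.
interface PlatformEvent {
  id?: string;     // optional event id (the browser reports it as lastEventId)
  event?: string;  // optional event name; unnamed events arrive as "message"
  data: string;    // payload; may span several lines
}

// Serialize one event into the text/event-stream format:
// optional "id:" and "event:" fields, one "data:" line per payload line,
// and a blank line that marks the end of the event.
function formatSseEvent(e: PlatformEvent): string {
  const lines: string[] = [];
  if (e.id !== undefined) lines.push(`id: ${e.id}`);
  if (e.event !== undefined) lines.push(`event: ${e.event}`);
  for (const part of e.data.split("\n")) lines.push(`data: ${part}`);
  return lines.join("\n") + "\n\n";
}

// Browser side (sketch; "/realtime" is a placeholder URL, not a real endpoint):
//   const source = new EventSource("/realtime");
//   source.onmessage = (ev) => console.log(ev.data);
```

The server would write each formatted string to the open response without ending it, which is what keeps the connection persistent.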


IDEAS CURATED BY

Alt account of @ocp. I use it to stash ideas about software engineering
