Streaming a Million Likes/Second: Real-Time Interactions on Live Video

Curated from: InfoQ

The Realtime Platform

LinkedIn has built the Realtime Platform to distribute multiple types of data in real time, such as:

- Likes, comments and viewer count for Live Videos

- Typing indicators and Read receipts for Instant Messaging

- Presence, i.e. the green online indicators

Their goal is to increase user engagement by enabling dynamic, instant experiences between users, such as likes, comments, polls and discussions.

Interactive Live Videos

Having many people interact on a live video poses several technical challenges, mainly because viewers generate a large number of interactions that must be delivered quickly to the other viewers.

To get a sense of the scale, the largest live streams in the world have drawn tens of millions of concurrent viewers:

- Cricket World Cup Semifinal 2019 - 25M concurrent viewers

- British Royal Wedding 2018 - 18M concurrent viewers

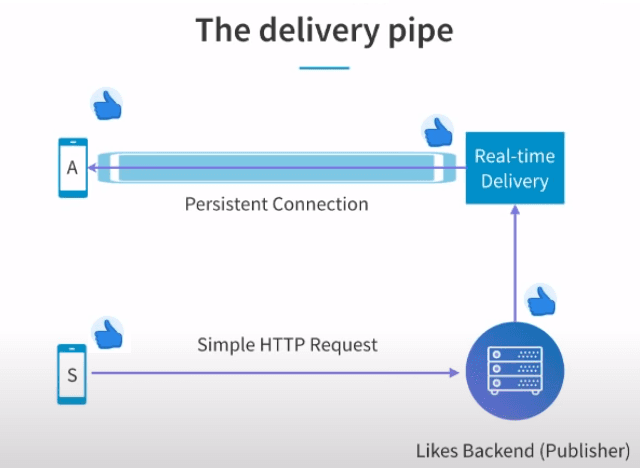

Challenge 1: The Delivery Pipe

- User devices have a persistent connection to the Realtime Platform servers.

- The servers push data over this connection using server-sent events (SSE), which the client receives through the browser's EventSource interface.

A persistent connection here is an HTTP long poll, i.e. a regular HTTP connection that the server keeps open instead of closing it, so it can keep streaming events to the client.
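
Server-sent events use a simple text framing: each event is a few `field: value` lines followed by a blank line, written into a response served with `Content-Type: text/event-stream`. The Python sketch below only formats such frames; the `like` event name and the JSON payload are hypothetical, since the source does not describe LinkedIn's actual event format.

```python
from typing import Optional


def format_sse(data: str, event: Optional[str] = None, event_id: Optional[str] = None) -> str:
    """Frame one server-sent event in the text/event-stream format."""
    lines = []
    if event_id is not None:
        lines.append(f"id: {event_id}")  # lets a reconnecting client report the last id it saw
    if event is not None:
        lines.append(f"event: {event}")  # EventSource dispatches to addEventListener(event, ...)
    # A multi-line payload is split across several data: lines (assumes "\n" line endings);
    # the client joins them back together with "\n".
    for chunk in data.split("\n"):
        lines.append(f"data: {chunk}")
    return "\n".join(lines) + "\n\n"  # a blank line terminates the event


print(format_sse('{"videoId": "v1"}', event="like"), end="")
```

A server would write one such frame per event into the still-open response and flush it; a browser client listening with `addEventListener("like", ...)` on its `EventSource` then receives each payload as it arrives.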

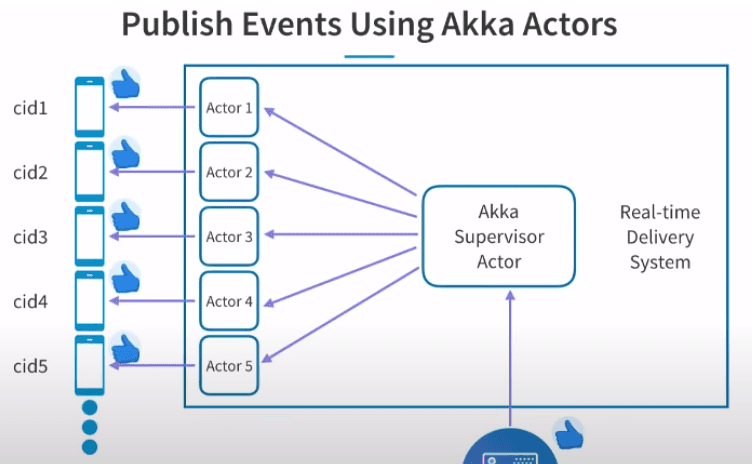

Challenge 2: Connection Management

- Each connection is managed by an Akka actor.

- Actors are so lightweight that there can be millions of them on a single system. Moreover, all of them can be served by a small number of threads, proportional to the number of cores. This is possible because a thread is assigned to an actor only when it has work to do.

- Actors are managed by an Akka supervisor actor that sends them events (likes, comments, etc.) to be forwarded to user devices.

Akka is a toolkit for building highly concurrent, distributed, and resilient message-driven apps.
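
The "many actors, few threads" property can be illustrated without Akka. The sketch below is an analogy in Python's `asyncio`, not Akka's API: each hypothetical `ConnectionActor` is a coroutine with a mailbox queue, and all of them share a single event-loop thread. An actor with an empty mailbox is a suspended coroutine that holds no thread, mirroring how Akka only schedules an actor onto a thread when it has messages to process.

```python
from __future__ import annotations

import asyncio


class ConnectionActor:
    """Hypothetical per-connection actor: a mailbox plus a message handler."""

    def __init__(self, conn_id: str) -> None:
        self.conn_id = conn_id
        self.mailbox: asyncio.Queue = asyncio.Queue()
        self.sent: list[str] = []  # events that would be written to this client's SSE stream

    async def run(self) -> None:
        while True:
            # Suspends here, holding no thread, until a message arrives.
            event = await self.mailbox.get()
            if event is None:  # poison pill: the connection closed
                return
            self.sent.append(event)


class Supervisor:
    """Hypothetical supervisor that spawns actors and forwards events to them."""

    def __init__(self) -> None:
        self.actors: dict[str, ConnectionActor] = {}
        self.tasks: list[asyncio.Task] = []

    def spawn(self, conn_id: str) -> ConnectionActor:
        actor = ConnectionActor(conn_id)
        self.actors[conn_id] = actor
        self.tasks.append(asyncio.create_task(actor.run()))
        return actor

    def tell(self, conn_id: str, event: str) -> None:
        # Fire-and-forget, in the spirit of Akka's `tell`: enqueue and return immediately.
        self.actors[conn_id].mailbox.put_nowait(event)

    async def shutdown(self) -> None:
        for actor in self.actors.values():
            actor.mailbox.put_nowait(None)
        await asyncio.gather(*self.tasks)


async def demo(n: int) -> Supervisor:
    sup = Supervisor()
    for i in range(n):
        sup.spawn(f"conn-{i}")
    sup.tell("conn-0", "like")
    sup.tell("conn-1", "comment")
    await sup.shutdown()
    return sup
```

Running `asyncio.run(demo(10_000))` creates ten thousand actors on one thread; only `conn-0` and `conn-1` record an event, and the rest stay suspended until their shutdown message arrives.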

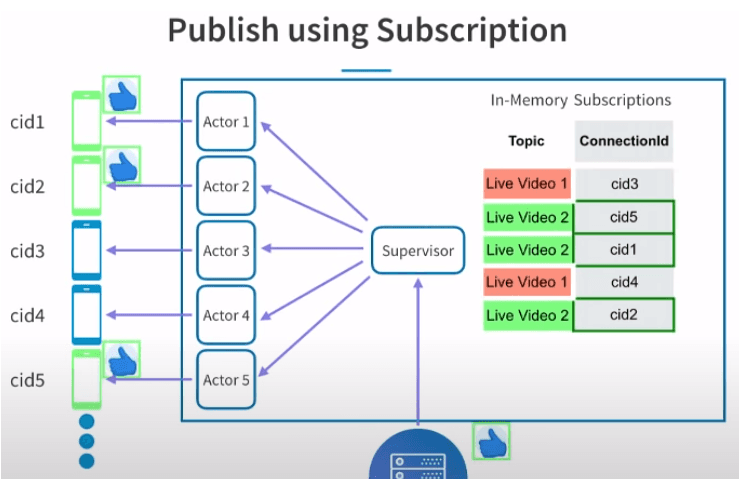

Challenge 3: Multiple Live Videos

- Each client subscribes to events for a particular live video, i.e. it tells the server which live video it is watching.

- The Frontend server stores all subscriptions in an in-memory table.

- Every time a new event is published, the supervisor actor does a lookup in the in-memory table to determine which actors need to receive this event.
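
A minimal sketch of the Frontend server's in-memory subscription table, assuming it maps a live video id to the ids of the connections watching it. `SubscriptionTable`, `publish` and the `send` callback (standing in for a message to the connection's actor) are hypothetical names.

```python
from collections import defaultdict
from typing import Callable, Dict, Set


class SubscriptionTable:
    """Hypothetical in-memory table: live video id -> ids of connections watching it."""

    def __init__(self) -> None:
        self._subs: Dict[str, Set[str]] = defaultdict(set)

    def subscribe(self, video_id: str, conn_id: str) -> None:
        self._subs[video_id].add(conn_id)

    def unsubscribe(self, video_id: str, conn_id: str) -> None:
        conns = self._subs.get(video_id)
        if conns is not None:
            conns.discard(conn_id)
            if not conns:
                del self._subs[video_id]  # drop empty entries so the table doesn't grow forever

    def lookup(self, video_id: str) -> Set[str]:
        # .get() avoids creating an empty entry for videos nobody is watching.
        return set(self._subs.get(video_id, ()))


def publish(table: SubscriptionTable, video_id: str, event: str,
            send: Callable[[str, str], None]) -> int:
    """Look up the watchers of `video_id` and hand the event to each one."""
    recipients = table.lookup(video_id)
    for conn_id in sorted(recipients):
        send(conn_id, event)
    return len(recipients)
```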

Challenge 4: 10K Concurrent Viewers

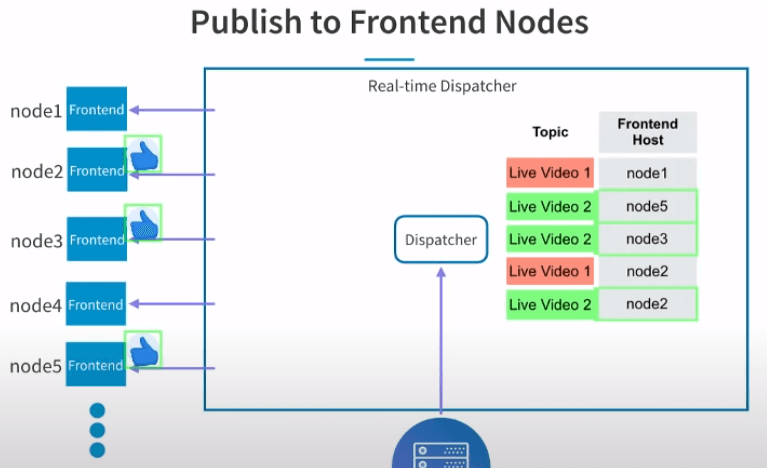

- Scale horizontally to handle more concurrent viewers -> Add multiple Frontend nodes and coordinate them using a Dispatcher node.

- In a similar fashion to the Frontend node, the Dispatcher has a subscriptions table to know which frontend nodes should receive which events.

- This table is populated by subscription requests: each Frontend node tells the Dispatcher which live videos it is interested in, i.e. which live videos its connections are subscribed to.
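
The two levels of subscription can be sketched in-process. In this hypothetical model the Dispatcher maps a video id to Frontend nodes, and each Frontend node maps it to its local connections. Having a Frontend node subscribe with the Dispatcher only when its first local viewer of a video appears is a design choice of the sketch, not something the source specifies.

```python
from collections import defaultdict


class Dispatcher:
    """Hypothetical Dispatcher: video id -> Frontend nodes with at least one viewer."""

    def __init__(self):
        self.subs = defaultdict(set)

    def subscribe(self, video_id, frontend):
        self.subs[video_id].add(frontend)

    def publish(self, video_id, event):
        for frontend in list(self.subs.get(video_id, ())):
            frontend.deliver(video_id, event)


class FrontendNode:
    """Hypothetical Frontend node: video id -> its local connection ids."""

    def __init__(self, name, dispatcher):
        self.name = name
        self.dispatcher = dispatcher
        self.subs = defaultdict(set)
        self.outbox = []  # (conn_id, event) pairs that would be streamed to devices

    def client_subscribes(self, conn_id, video_id):
        if not self.subs[video_id]:
            # First local viewer of this video: register with the Dispatcher once.
            self.dispatcher.subscribe(video_id, self)
        self.subs[video_id].add(conn_id)

    def deliver(self, video_id, event):
        for conn_id in sorted(self.subs.get(video_id, ())):
            self.outbox.append((conn_id, event))
```

Because the Dispatcher tracks nodes rather than connections, its fan-out per event is bounded by the number of Frontend nodes, however many viewers each node holds.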

Challenge 5: 100 Likes/second

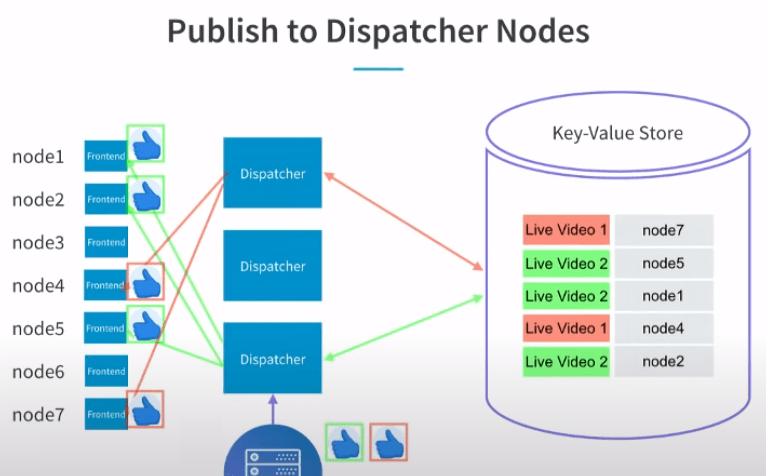

- Scale horizontally again to handle more events -> Add multiple Dispatchers and move the subscription table into a key-value store so it's accessible to all Dispatchers.

- Dispatchers are independent of Frontend nodes, and there are no persistent connections between them.

- Any Frontend node can subscribe to any Dispatcher.

- Any Dispatcher can publish events to any Frontend node.
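
Moving the table into a shared store is what makes Dispatchers interchangeable. In this sketch a plain dictionary wrapped in a hypothetical `KeyValueStore` stands in for the real key-value store (the source does not name the product), and lists stand in for HTTP calls to Frontend nodes. A subscription made through one Dispatcher is visible to a publish through any other.

```python
class KeyValueStore:
    """Hypothetical stand-in for the shared store: video id -> subscribed Frontend node ids."""

    def __init__(self):
        self._data = {}

    def add(self, key, member):
        self._data.setdefault(key, set()).add(member)

    def members(self, key):
        return set(self._data.get(key, ()))


class Dispatcher:
    """Keeps no subscription state of its own; every read and write goes to the store."""

    def __init__(self, name, store, inboxes):
        self.name = name
        self.store = store
        self.inboxes = inboxes  # Frontend node id -> list standing in for an HTTP call

    def subscribe(self, video_id, frontend_id):
        self.store.add(video_id, frontend_id)

    def publish(self, video_id, event):
        targets = sorted(self.store.members(video_id))
        for frontend_id in targets:
            self.inboxes[frontend_id].append((video_id, event))
        return targets
```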

Final architecture

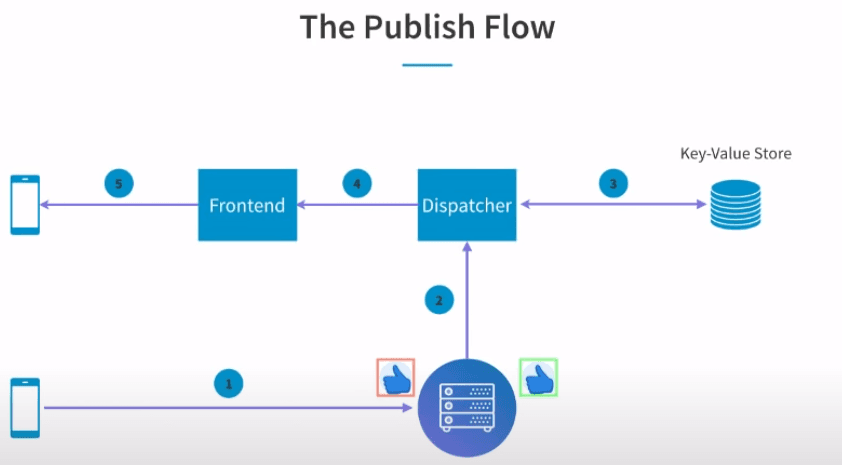

- A viewer likes a video, and the client sends an HTTP request to the Likes backend, which stores the like in a database.

- The backend forwards the like to a randomly chosen Dispatcher node using an HTTP request.

- The Dispatcher looks up the key-value store to find out which Frontend nodes are subscribed to likes from that video.

- The Dispatcher sends the like to those Frontend nodes.

- Each Frontend node sends the like to the client devices subscribed to that video.
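
The five steps above can be simulated end to end in a single process. All class and function names are hypothetical, a list stands in for the likes database, and a seeded `random.Random` stands in for the random choice of Dispatcher.

```python
import random


class FrontendNode:
    """Hypothetical Frontend node holding SSE connections for many viewers."""

    def __init__(self, node_id):
        self.node_id = node_id
        self.subs = {}  # in-memory table: video id -> set of connection ids
        self.sent = []  # (conn_id, event) pairs pushed down the SSE connections

    def deliver(self, video_id, event):
        # Lookup 2: the node's in-memory subscription table.
        for conn_id in sorted(self.subs.get(video_id, ())):
            self.sent.append((conn_id, event))


class Dispatcher:
    """Hypothetical stateless Dispatcher; all subscription state lives in `kv`."""

    def __init__(self, kv, frontends):
        self.kv = kv                # shared key-value store: video id -> Frontend node ids
        self.frontends = frontends  # Frontend node id -> FrontendNode (stands in for HTTP)

    def publish(self, video_id, event):
        # Lookup 1: which Frontend nodes have viewers of this video?
        for node_id in sorted(self.kv.get(video_id, ())):
            self.frontends[node_id].deliver(video_id, event)


class LikesBackend:
    """Hypothetical Likes backend: persist first, then hand off to any Dispatcher."""

    def __init__(self, dispatchers, rng):
        self.db = []  # stand-in for the likes database
        self.dispatchers = dispatchers
        self.rng = rng

    def handle_like(self, user_id, video_id):
        like = {"user": user_id, "video": video_id}
        self.db.append(like)                            # step 1: store the like
        dispatcher = self.rng.choice(self.dispatchers)  # step 2: pick a random Dispatcher
        dispatcher.publish(video_id, like)              # steps 3-5: look up, fan out, stream
        return like


def subscribe(kv, frontend, conn_id, video_id):
    """A viewer's connection subscribes; its Frontend node registers in the shared store."""
    frontend.subs.setdefault(video_id, set()).add(conn_id)
    kv.setdefault(video_id, set()).add(frontend.node_id)
```

Whichever Dispatcher the backend picks, the result is the same, because none of them holds subscription state of its own.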

Bonus Challenge: Multiple data-centers

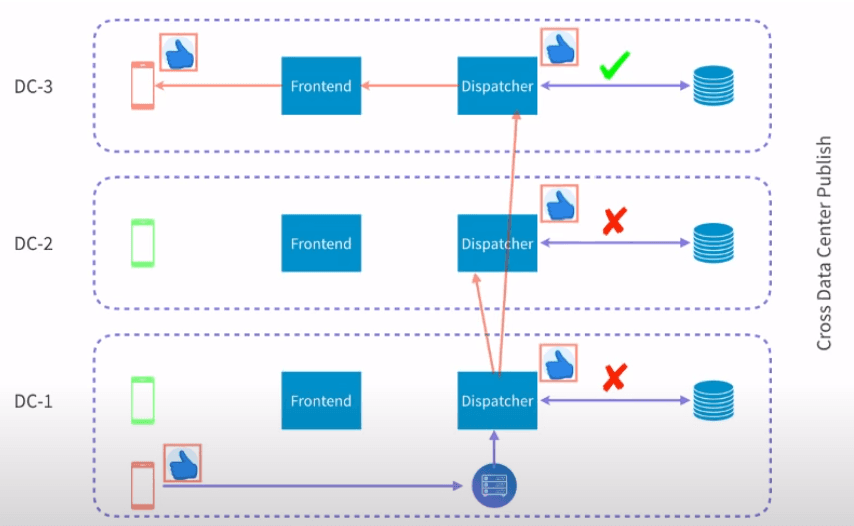

Expanding to other regions requires two steps:

- Replicate the setup in each data-center.

- Have each Dispatcher broadcast events to its peer Dispatchers in the other data-centers.
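
A sketch of the cross-data-center broadcast. The rule that a Dispatcher forwards only events it received locally, and never re-broadcasts an event that came from a peer, is an assumption added here to show one way of avoiding loops; the source only says events are broadcast to peer Dispatchers. The class and `connect` helper are hypothetical.

```python
class Dispatcher:
    """Hypothetical Dispatcher in one data-center, wired to peers in the others."""

    def __init__(self, dc):
        self.dc = dc
        self.peers = []      # Dispatchers in the other data-centers
        self.published = []  # events fanned out to this data-center's Frontend nodes

    def publish_local(self, event):
        # Stand-in for the key-value lookup and Frontend fan-out within this data-center.
        self.published.append(event)

    def on_event(self, event, from_peer=False):
        self.publish_local(event)
        if not from_peer:
            # Assumption: only the Dispatcher that first received the event broadcasts it,
            # so peers never send it back and no event loops between data-centers.
            for peer in self.peers:
                peer.on_event(event, from_peer=True)


def connect(dispatchers):
    """Fully mesh one Dispatcher per data-center with its peers."""
    for d in dispatchers:
        d.peers = [p for p in dispatchers if p is not d]
```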

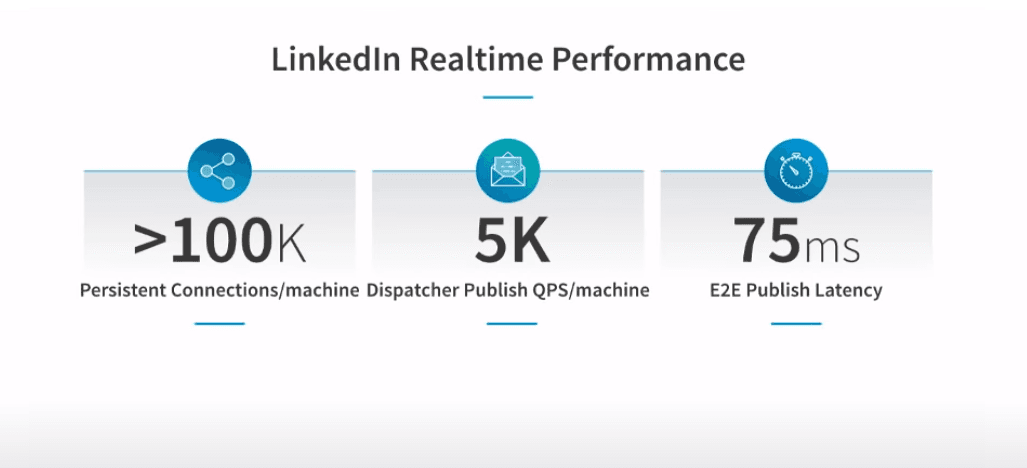

Performance and scale

- Each Frontend node handles 100k persistent connections. It handles only this many because the same server also does a lot of work processing multiple types of data (likes, comments, instant messaging, etc.).

- Each Dispatcher can publish 5k events per second to the Frontend nodes.

- End-to-end publish latency is 75 ms at p90, measured from the moment a like is received until it is sent to a viewer. The path involves only two subscription-table lookups (the key-value store at the Dispatcher and the in-memory table at the Frontend node) plus a series of network calls.

- The system is completely horizontally scalable. You can add more Dispatchers and Frontend nodes to handle more viewers and more events.
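
The figures above allow a rough capacity estimate. This sketch assumes, beyond what the source states, that the 5k events/second figure counts each delivery from a Dispatcher to one Frontend node separately; the function names are hypothetical.

```python
CONNECTIONS_PER_FRONTEND = 100_000     # figure above: persistent connections per Frontend node
EVENTS_PER_DISPATCHER_PER_SEC = 5_000  # figure above: events a Dispatcher publishes per second


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division, avoiding floating-point rounding."""
    return -(-a // b)


def frontend_nodes_needed(concurrent_viewers: int) -> int:
    return ceil_div(concurrent_viewers, CONNECTIONS_PER_FRONTEND)


def dispatchers_needed(events_per_sec: int, frontend_nodes_per_event: int) -> int:
    # Assumption: each (event, subscribed Frontend node) pair is one publish
    # counted against the per-Dispatcher rate.
    publishes_per_sec = events_per_sec * frontend_nodes_per_event
    return ceil_div(publishes_per_sec, EVENTS_PER_DISPATCHER_PER_SEC)
```

For a stream at the scale of the 25M-viewer Cricket World Cup semifinal, `frontend_nodes_needed(25_000_000)` gives 250 Frontend nodes. If 1,000 likes/second on that stream each had to reach all 250 nodes, `dispatchers_needed(1_000, 250)` gives 50 Dispatchers under the same assumption.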

Related posts

- How LinkedIn displays Presence indicators in real-time: https://engineering.linkedin.com/blog/2018/01/now-you-see-me--now-you-dont--linkedins-real-time-presence-platf

- How LinkedIn measures end-to-end latency across systems: https://engineering.linkedin.com/blog/2018/04/samza-aeon--latency-insights-for-asynchronous-one-way-flows

- How LinkedIn scaled one server to handle hundreds of thousands of persistent connections: https://engineering.linkedin.com/blog/2016/10/instant-messaging-at-linkedin--scaling-to-hundreds-of-thousands-

Ideas curated by Ovidiu Podariu (Tech).
