What is all the fuss about GPT-3?

Get The Basics of GPT-3

GPT-3 is easier to understand once you know a few key terms. These are:

- Artificial Intelligence (AI)

- Machine learning (ML)

- Deep Learning (DL)

- Language Model (LM)

- Autoregressive (AR) Model

- Natural Language Processing (NLP)

- OpenAI

Once you have a handle on these terms, GPT-3 itself is easy to understand.

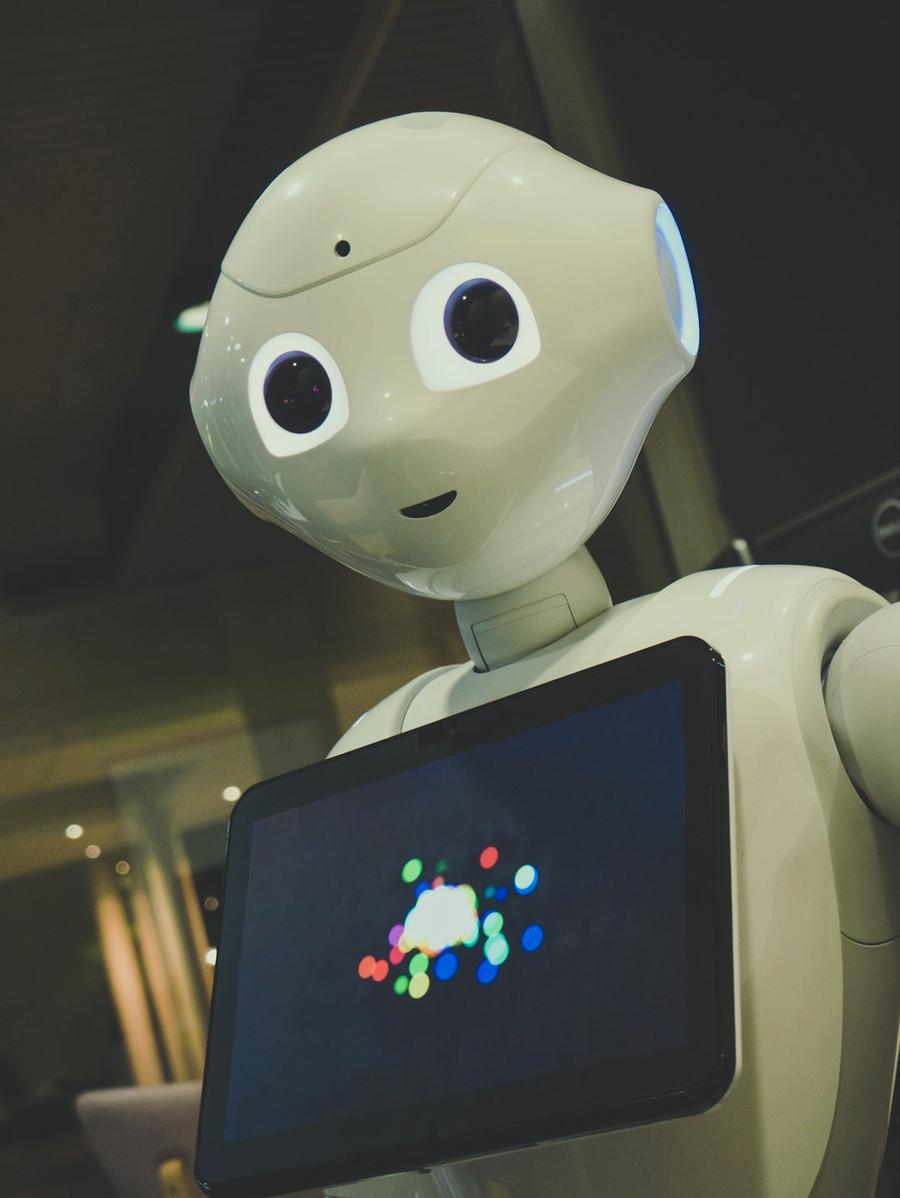

Artificial Intelligence (AI) in GPT-3

AI is when machines perform tasks that normally require human intelligence, such as learning and problem-solving.

Machine learning (ML) in GPT-3

Machine learning is a field of artificial intelligence that focuses on understanding and creating methods that "learn". This means that the methods get better at doing certain tasks as they get more data.

Deep Learning (DL) in GPT-3

Deep learning is a type of machine learning that uses neural networks with many layers to learn patterns directly from data. This type of learning can be supervised, semi-supervised, or unsupervised.

Language Model (LM) in GPT-3

A language model is a statistical way of predicting which word comes next, based on the probability of different word sequences.

Formally, a language model is a probability distribution over sequences of words.

Language models are used for many different tasks, such as part-of-speech (PoS) tagging, machine translation, text classification, speech recognition, information retrieval, and news article generation.
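To make "predicting the next word from probabilities" concrete, here is a minimal sketch of a bigram model, which only looks at the single previous word. It is far simpler than GPT-3 and is not how GPT-3 is implemented; the function names and the toy corpus are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigram_model(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    follow_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follow_counts[current_word][next_word] += 1
    return follow_counts

def next_word_probabilities(model, word):
    """Turn the raw follow-up counts for `word` into probabilities."""
    counts = model[word]
    total = sum(counts.values())
    return {candidate: count / total for candidate, count in counts.items()}

# Toy corpus, invented for this example.
corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigram_model(corpus)
probs = next_word_probabilities(model, "the")
print(probs)  # {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
```

In this corpus "the" is followed by "cat" twice and by "mat" and "fish" once each, so the model assigns "cat" a probability of 0.5. A large language model does the same kind of thing, but conditions on much more than one previous word.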

Autoregressive (AR) Model in GPT-3

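An AR model is a way to describe a random process in which each new value depends on the values that came before it. AR models are used to understand time-varying processes in areas like nature and economics. GPT-3 is autoregressive in the same sense: it predicts each word from the words that precede it.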
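As a rough illustration, the simplest autoregressive process, usually written AR(1), computes each value as a multiple of the previous value plus random noise. The sketch below simulates one; the function name, the coefficient `phi`, and the default settings are assumptions chosen for the example, not anything taken from GPT-3.

```python
import random

def simulate_ar1(phi, n_steps, noise_std=1.0, start=0.0, seed=0):
    """Simulate x[t] = phi * x[t-1] + noise for n_steps steps."""
    rng = random.Random(seed)  # seeded so repeated runs give the same series
    values = [start]
    for _ in range(n_steps):
        noise = rng.gauss(0.0, noise_std)
        values.append(phi * values[-1] + noise)
    return values

series = simulate_ar1(phi=0.8, n_steps=10)
print(len(series))  # 11 values: the start plus 10 simulated steps

# With the noise switched off, each value is exactly half the previous one.
print(simulate_ar1(phi=0.5, n_steps=3, noise_std=0.0, start=8.0))  # [8.0, 4.0, 2.0, 1.0]
```

A language model applies the same idea to text: instead of a number that depends on the previous number, the next word depends on the previous words.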

Natural Language Processing (NLP) in GPT-3

NLP is a way to help computers understand human language. It is a subfield of linguistics, computer science, artificial intelligence, and information engineering.

OpenAI in GPT-3

OpenAI is a research lab that studies artificial intelligence. It was founded in 2015 with backing from donors such as Elon Musk, and it later received investment from Microsoft.

GPT-3 explanation for the layman

GPT-3 stands for Generative Pre-trained Transformer 3.

GPT-3 is a computer program that can create text that looks like it was written by a human. This program is gaining popularity because it can also create code, stories, and poems.

GPT-3 has gained a lot of traction in natural language processing (NLP), an essential sub-branch of data science.

Breakdown of Generative || Pre-trained || Transformer

Generative models are a type of statistical model that creates new data by learning the relationships between different variables.

Pre-trained models are models that have already been trained on a large dataset. This allows them to be used for tasks where it would be difficult to train a model from scratch. A pre-trained model may not be 100% accurate, but it can save you time and improve performance.

A Transformer is a deep learning model that is used for tasks such as machine translation and text classification. It is designed to handle sequential data, such as text.

Creation of GPT-3

OpenAI, a research lab in San Francisco, created a deep learning model with 175 billion parameters (the adjustable weights the model learns during training) that can produce human-like text.

It was trained on large text datasets with hundreds of billions of words.
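To give a feel for what a parameter count means, the sketch below counts the learned numbers (weights and biases) in a tiny stack of fully connected layers. This is only an illustration: GPT-3 is a Transformer rather than a plain stack like this, and the layer sizes and function name here are hypothetical.

```python
def count_dense_parameters(layer_sizes):
    """Count weights and biases in a stack of fully connected layers."""
    total = 0
    for n_inputs, n_outputs in zip(layer_sizes, layer_sizes[1:]):
        weights = n_inputs * n_outputs  # one weight per input-output connection
        biases = n_outputs              # one bias per output unit
        total += weights + biases
    return total

tiny_network = [4, 8, 2]  # hypothetical layer sizes, chosen only for illustration
tiny_total = count_dense_parameters(tiny_network)
print(tiny_total)  # 58
```

This toy network has 58 parameters (4×8 + 8 for the first layer, 8×2 + 2 for the second). GPT-3 has 175 billion of them, each adjusted during training.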

GPT-3 is way better than its predecessor

Language models prior to GPT-3 were typically designed to perform one specific NLP task, such as generating text, summarizing, or classifying. The first of its kind, GPT-3 is a general-purpose language model that can perform well across a wide range of NLP tasks.

How does GPT-3 work?

Two of the main kinds of machine learning are supervised and unsupervised learning.

Supervised learning is when you have a lot of data that is carefully labeled so the machine can learn how to produce outputs for particular inputs.

Unsupervised learning is when the machine is exposed to a lot of data but doesn't have any labels.

GPT-3 is an unsupervised learner and it learned how to write by analyzing a lot of unlabeled data, like Reddit posts, Wikipedia articles, and news articles.
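One way to picture learning from unlabeled text is that the text supplies its own answers: every word can serve as the target for the words before it. The sketch below builds such (context, next word) pairs from a plain sentence. It is a simplification under stated assumptions: GPT-3 works on sub-word tokens with a far longer context, and the function name and `context_size` parameter are invented for this example.

```python
def next_word_examples(text, context_size=3):
    """Build (context, next_word) training pairs from raw, unlabeled text."""
    words = text.split()
    examples = []
    for i in range(1, len(words)):
        # Use up to `context_size` preceding words as the input.
        context = words[max(0, i - context_size):i]
        examples.append((context, words[i]))
    return examples

examples = next_word_examples("GPT-3 learns to predict the next word")
for context, target in examples:
    print(context, "->", target)
```

The first pair is `['GPT-3'] -> learns` and the last is `['predict', 'the', 'next'] -> word`. Nobody had to label anything by hand, which is why huge amounts of ordinary text can be used for training.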

CURATOR'S NOTE

Having spent weeks attempting to understand what GPT-3 is, I have finally come up with my own simple explanation. Hope this makes sense to you.
