Breakdown of Generative || Pre-trained || Transformer

Generative models are statistical models that learn the relationships between variables in their training data and use what they have learned to create new data that resembles it.
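To make "creating new data from learned relationships" concrete, here is a minimal sketch of a very small generative model: a word-level bigram chain. It is only an illustration and is far simpler than GPT-3; the names `train_bigram`, `generate`, and the toy `corpus` are made up for this example.

```python
import random
from collections import defaultdict

def train_bigram(text):
    """Record which word follows which: a crude estimate of P(next | current)."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start, length, seed=0):
    """Sample a new word sequence from the learned transitions."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        options = followers.get(out[-1])
        if not options:  # dead end: this word was never followed by anything
            break
        out.append(rng.choice(options))
    return " ".join(out)

# Toy training data (an assumption for illustration only).
corpus = "the cat sat on the mat and the dog sat on the rug"
model = train_bigram(corpus)
sample = generate(model, "the", 8)
print(sample)
```

The sampled sentence may never appear in the corpus, yet every word pair in it does. That is the same idea, at a tiny scale, as learning relationships and then generating from them.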

Pre-trained models have already been trained on a large dataset, which makes them useful for tasks where training a model from scratch would be difficult. A pre-trained model will not be perfectly accurate on a new task, but reusing one can save time and improve performance.
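One common way to reuse a pre-trained model is to keep its learned weights fixed and train only a small new layer on top. The sketch below assumes this setup; `PRETRAINED_W`, `extract_features`, `train_head`, and the toy data are all hypothetical stand-ins, not weights or APIs from any real model.

```python
# Hypothetical "pre-trained" weights: pretend these were learned earlier on a large dataset.
PRETRAINED_W = [[0.5, -0.2], [0.1, 0.8]]

def extract_features(x):
    """Frozen layer: apply the pre-trained weights unchanged, followed by a ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]

def predict(head, bias, x):
    """Linear head on top of the frozen features."""
    return sum(h * f for h, f in zip(head, extract_features(x))) + bias

def mean_squared_error(head, bias, data):
    return sum((predict(head, bias, x) - y) ** 2 for x, y in data) / len(data)

def train_head(data, epochs=500, lr=0.5):
    """Fit only the small head with stochastic gradient descent; PRETRAINED_W is never updated."""
    head, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            feats = extract_features(x)
            err = predict(head, bias, x) - y
            head = [h - lr * err * f for h, f in zip(head, feats)]
            bias -= lr * err
    return head, bias

# Tiny task-specific dataset (illustrative only).
data = [([1.0, 0.0], 1.0), ([0.0, 1.0], 0.0), ([0.0, 0.0], 0.0)]
loss_before = mean_squared_error([0.0, 0.0], 0.0, data)
head, bias = train_head(data)
loss_after = mean_squared_error(head, bias, data)
print(loss_before, loss_after)
```

Only three numbers are trained here (two head weights and a bias), which is why starting from a pre-trained model can be much cheaper than training everything from scratch.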

A Transformer is a deep learning model used for tasks such as machine translation and text classification. It is designed to handle sequential data, such as text.
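Transformers handle sequences mainly through an operation called self-attention, in which every position in the sequence is updated with a weighted mix of all positions. The sketch below is a stripped-down version that assumes the queries, keys, and values are the input vectors themselves; real Transformers add learned projections, multiple heads, and more layers. The function names and the toy `tokens` are illustrative.

```python
import math

def softmax(xs):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product attention with the inputs used as queries, keys, and values."""
    d = len(vectors[0])
    outputs = []
    for q in vectors:
        # Similarity of this position to every position, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in vectors]
        weights = softmax(scores)
        # New representation: weighted average of all input vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, vectors)) for j in range(d)])
    return outputs

# Three toy "token" vectors (an assumption for illustration).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
mixed = self_attention(tokens)
for row in mixed:
    print(row)
```

Each output row depends on the whole sequence at once, which is what lets the model relate words that are far apart in a sentence.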

Having spent weeks attempting to understand what GPT-3 is, I have finally come up with my own simple explanation. Hope this makes sense to you.

Similar ideas to Breakdown of Generative || Pre-trained || Transformer

Transfer learning and fine tuning

Transfer learning consists of taking features learned on one problem and leveraging them on a new, similar problem. For instance, features from a model that has learned to identify raccoons may be useful to kick-start a model meant to identify tanukis.

- Take layers from...

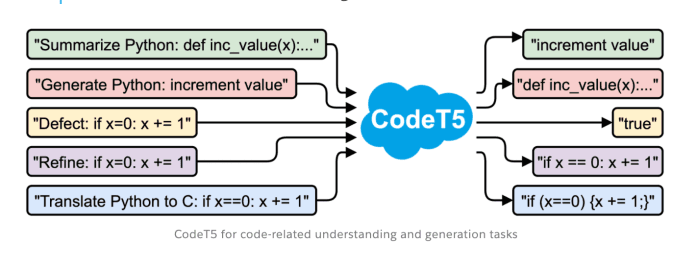

The Salesforce team has created and open-sourced a new identifier-aware, unified, pre-trained encoder-decoder model called CodeT5. So far, they have demonstrated state-of-the-art results in multiple code-related downstream tasks ...

Transfer learning concept

The biggest problem, though, is that models like this one are trained for only a single task. Each future task requires a new set of data points as well as an equal or greater amount of resources.

Transfer learning is an approach in deep learning (and machine learning) where knowledge is ...
