OpenAI's CLIP is the most important advancement in computer vision this year

Curated from: blog.roboflow.com


CLIP is a gigantic leap forward, bringing many of the recent developments from the realm of natural language processing into the mainstream of computer vision: unsupervised learning, transformers, and multimodality to name a few. The burst of innovation it has inspired shows its versatility.

And this is likely just the beginning. There has been scuttlebutt recently about the coming age of "foundation models" in artificial intelligence that will underpin the state of the art across many different problems in AI; I think CLIP is going to turn out to be the bedrock model for computer vision.


What is CLIP?

In a nutshell, CLIP is a multimodal model that combines knowledge of English-language concepts with semantic knowledge of images.

It can just as easily distinguish between an image of a "cat" and a "dog" as it can between "an illustration of Deadpool pretending to be a bunny rabbit" and "an underwater scene in the style of Vincent Van Gogh" (even though it has almost certainly never seen those things in its training data). This is because of its generalized knowledge of what those English phrases mean and what those pixels represent.


This is in contrast to traditional computer vision models which disregard the context of their labels (in other words, a "normal" image classifier works just as well if your labels are "cat" and "dog" or "foo" and "bar"; behind the scenes it just converts them into a numeric identifier with no particular meaning).
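To make the "foo" and "bar" point concrete, here is a minimal sketch of how a conventional classifier typically treats its labels. The `encode_labels` helper is a hypothetical name for illustration, not part of any particular library.

```python
def encode_labels(class_names):
    """Map each class name to an integer index, as a conventional classifier does."""
    return {name: index for index, name in enumerate(class_names)}


# Two label sets with very different meanings...
animals = encode_labels(["cat", "dog"])
placeholders = encode_labels(["foo", "bar"])

# ...collapse to the same numeric targets, so the model never sees the words.
print(animals)
print(placeholders)
```

Once the names are replaced by indices, nothing about the word "cat" is available to the model during training.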

In real-world tasks, the "glyphs" a model learns to recognize are actually patterns of pixels (features) representing abstractions like colors, shapes, textures, and patterns (and even concepts like people and locations).


Use Cases

One of the neatest aspects of CLIP is how versatile it is. When OpenAI introduced it, they noted two use cases: image classification and image generation. But in the 9 months since its release, it has been used for a far wider variety of tasks.


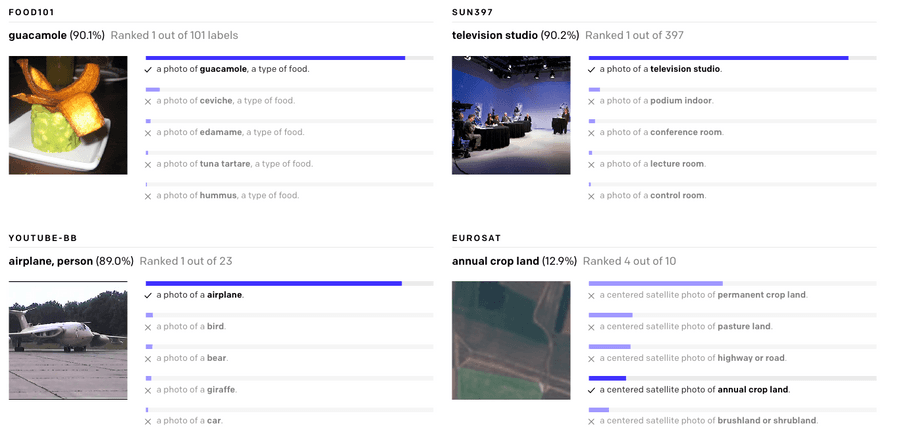

Image Classification

OpenAI originally evaluated CLIP as a zero-shot image classifier. They compared it against traditional supervised machine learning models and it performed nearly on par with them without having to be trained on any specific dataset.

One challenge with traditional approaches to image classification is that you need lots of training examples that closely resemble the distribution of the images the model will see in the wild. Because CLIP needs no task-specific examples, its advantage over these approaches grows the less training data there is available.
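A rough sketch of the zero-shot idea: compare one image embedding against the embeddings of several text prompts and pick the closest. The three-dimensional vectors below are made up for illustration; real embeddings would come from CLIP's image and text encoders and have hundreds of dimensions. The `zero_shot_classify` helper and the `temperature` value are assumptions, not OpenAI's code.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def zero_shot_classify(image_embedding, prompt_embeddings, temperature=100.0):
    """Return the best-matching prompt and a softmax over all prompts."""
    scores = {
        prompt: temperature * cosine_similarity(image_embedding, embedding)
        for prompt, embedding in prompt_embeddings.items()
    }
    # Subtract the max score before exponentiating for numerical stability.
    max_score = max(scores.values())
    exp_scores = {p: math.exp(s - max_score) for p, s in scores.items()}
    total = sum(exp_scores.values())
    probabilities = {p: v / total for p, v in exp_scores.items()}
    best = max(probabilities, key=probabilities.get)
    return best, probabilities


# Toy embeddings (made up); the labels are ordinary English prompts.
prompt_embeddings = {
    "a photo of a cat": [0.9, 0.1, 0.0],
    "a photo of a dog": [0.1, 0.9, 0.0],
}
image_embedding = [0.8, 0.3, 0.1]

best, probabilities = zero_shot_classify(image_embedding, prompt_embeddings)
print(best)
```

Adding a new class is just a matter of adding another prompt; no retraining is involved.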


Image Generation

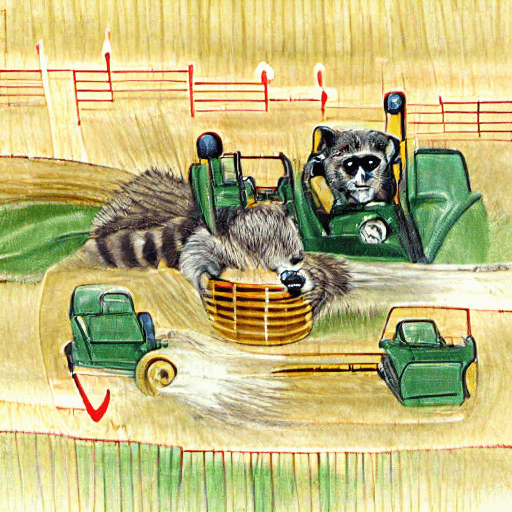

DALL-E was developed by OpenAI in tandem with CLIP. It's a generative model that can produce images based on a textual description; CLIP was used to evaluate its efficacy.

The DALL-E model has still not been released publicly, but CLIP has been behind a burgeoning AI-generated art scene. It is used to "steer" a GAN (generative adversarial network) towards a desired output. The most commonly used model is Taming Transformers' CLIP+VQGAN, which we dove deep on here.


Content Moderation

One extension of image classification is content moderation. If you ask it in the right way, CLIP can filter out graphic or NSFW images out of the box. We demonstrated content moderation with CLIP in a post here.


Image Search

Because CLIP doesn't need to be trained on specific phrases, it's perfectly suited for searching large catalogs of images. It doesn't need images to be tagged and can do natural language search.

Yurij Mikhalevich has already created an AI-powered command-line image search tool called rclip. It wouldn't surprise me if CLIP spawns a Google Image Search competitor in the near future.
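Natural language search over untagged images can be sketched as a nearest-neighbor lookup: embed every image once, embed the query text, and sort by similarity. This is only an illustration of the idea, not how rclip is implemented; the file names, vectors, and the `search` helper are all made up.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search(query_embedding, image_index, top_k=2):
    """Return the top_k image names most similar to the query embedding."""
    ranked = sorted(
        image_index.items(),
        key=lambda item: cosine_similarity(query_embedding, item[1]),
        reverse=True,
    )
    return [name for name, _ in ranked[:top_k]]


# Precomputed image embeddings (toy values standing in for CLIP output).
image_index = {
    "beach.jpg": [0.9, 0.1, 0.1],
    "forest.jpg": [0.1, 0.9, 0.2],
    "city.jpg": [0.2, 0.1, 0.9],
}
# Stand-in for the text embedding of a query like "a sunny beach".
query_embedding = [0.8, 0.2, 0.1]

results = search(query_embedding, image_index)
print(results)
```

For a large catalog you would likely swap the full sort for an approximate nearest-neighbor index, but the ranking logic stays the same.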


Image Similarity

Apple's NeuralHash semantic image similarity algorithm has been in the news a lot recently because of how Apple is applying it to scan user devices for CSAM. We showed how you can use CLIP to find similar images in much the same way NeuralHash does.

The applications of being able to find similar images go far beyond scanning for illegal content, though. It could be used to search for copyright violations, create a clone of TinEye, or build an advanced photo library de-duplicator.
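A photo library de-duplicator could be sketched as a pairwise comparison of image embeddings, flagging pairs above a similarity threshold. The vectors, file names, `find_near_duplicates` helper, and the 0.95 threshold are all assumptions for illustration; a real threshold would need tuning on actual CLIP embeddings.

```python
import math
from itertools import combinations


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def find_near_duplicates(embeddings, threshold=0.95):
    """Return pairs of image names whose embeddings are nearly identical."""
    pairs = []
    for (name_a, emb_a), (name_b, emb_b) in combinations(embeddings.items(), 2):
        if cosine_similarity(emb_a, emb_b) >= threshold:
            pairs.append((name_a, name_b))
    return pairs


# Toy embeddings: the resized copy lands very close to the original.
embeddings = {
    "photo.jpg": [0.9, 0.1, 0.2],
    "photo_resized.jpg": [0.88, 0.12, 0.21],
    "receipt.png": [0.1, 0.2, 0.95],
}

duplicates = find_near_duplicates(embeddings)
print(duplicates)
```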


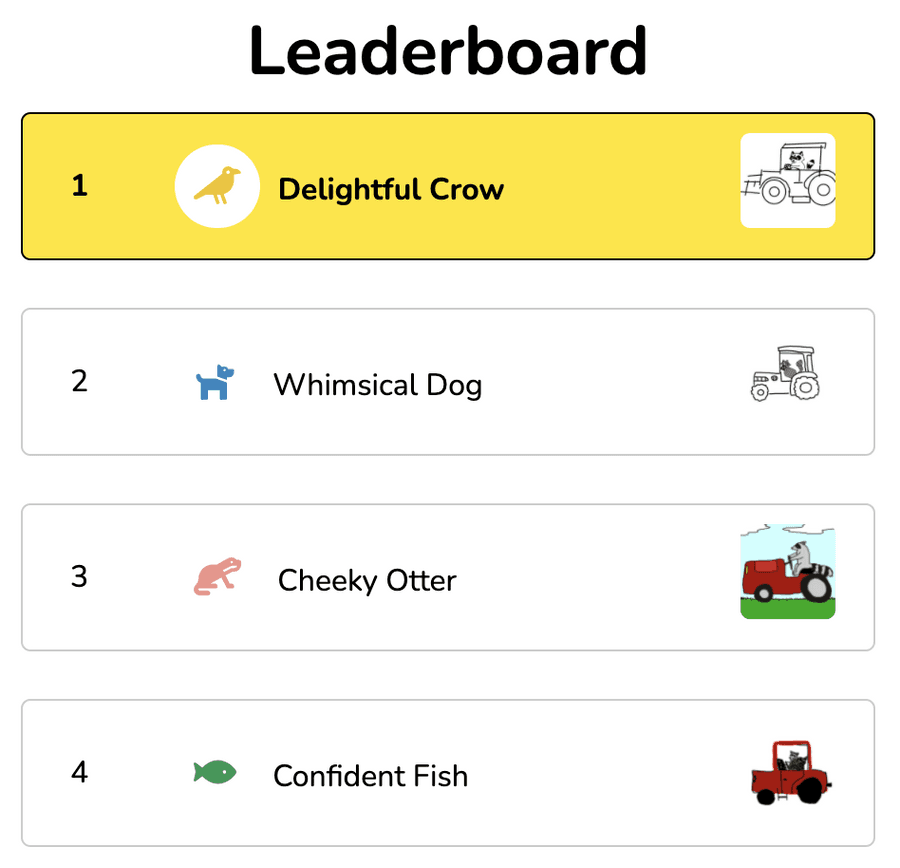

Image Ranking

It's not just factual representations that are encoded in CLIP's memory. It also knows about qualitative concepts (as we learned from the Unreal Engine trick).

We used this to create a CLIP-judged Pictionary-style game, but you could also use it to create a camera app that "scores" users' photos by "searching" for phrases like "award winning photograph" or "professional selfie of a model" to help users decide which images to keep and which ones to trash, for example.


Object Tracking

As an extension of image similarity, we've used CLIP to track objects across frames in a video. The approach uses an object detection model to find items of interest, then crops the image and uses CLIP to determine whether two detected objects are the same or a different instance of that object across different frames of a video.
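The matching step might look something like the sketch below: each crop from the new frame is compared with the embeddings of objects already being tracked, and it either inherits the closest track's ID or starts a new track. This is a simplified greedy matcher under assumed names (`match_detections`, `min_similarity`) and toy vectors, not the tracker we actually built.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def match_detections(tracks, detections, min_similarity=0.8):
    """Assign each detection embedding a track ID.

    tracks: {track_id: embedding} from earlier frames.
    detections: list of crop embeddings from the current frame.
    """
    assignments = []
    next_id = max(tracks, default=-1) + 1
    unmatched = dict(tracks)  # each existing track can be claimed only once
    for embedding in detections:
        best_id, best_similarity = None, min_similarity
        for track_id, track_embedding in unmatched.items():
            similarity = cosine_similarity(embedding, track_embedding)
            if similarity >= best_similarity:
                best_id, best_similarity = track_id, similarity
        if best_id is None:
            # Nothing similar enough: treat it as a new object.
            best_id = next_id
            next_id += 1
        else:
            del unmatched[best_id]
        assignments.append(best_id)
    return assignments


# Toy embeddings standing in for CLIP embeddings of cropped detections.
tracks = {0: [1.0, 0.0, 0.1], 1: [0.0, 1.0, 0.1]}
detections = [[0.1, 0.95, 0.1], [0.95, 0.05, 0.1], [0.1, 0.1, 1.0]]

assignments = match_detections(tracks, detections)
print(assignments)
```

Greedy matching is the simplest choice here; an optimal assignment (for example, the Hungarian algorithm) would handle crowded scenes better.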


Fine-Tuning CLIP

Unfortunately, CLIP doesn't perform well out of the box on every problem. It struggles with many hyper-specific use cases (e.g., examining the output of microchip lithography) and with identifying things invented since it was trained in 2020 (for example, the unique characteristics of CLIP+VQGAN creations). It should be possible to extend CLIP with additional data, essentially using it as a fantastic checkpoint for transfer learning.


Captioning

We've used CLIP along with GANs to convert text into images; there's no reason we can't go in the other direction and create rich captions for images with creative usage of CLIP (possibly along with a language model like GPT-3).


Video Indexing

If you can classify images, it should be doable to classify frames of videos. In this way you could automatically split videos into scenes and create search indexes. Imagine searching YouTube for your company's logo and magically finding all of the places where someone happened to have used your product.
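One way to split a video into scenes, sketched under the assumption that consecutive frames in the same scene have similar embeddings: start a new scene whenever the similarity between neighboring frames drops below a threshold. The `split_into_scenes` helper, the 0.7 threshold, and the toy vectors are illustrative guesses rather than a tested recipe.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def split_into_scenes(frame_embeddings, cut_threshold=0.7):
    """Return (start, end) frame index ranges, inclusive, one per scene."""
    if not frame_embeddings:
        return []
    scenes = []
    start = 0
    for i in range(1, len(frame_embeddings)):
        similarity = cosine_similarity(frame_embeddings[i - 1], frame_embeddings[i])
        if similarity < cut_threshold:
            # A sharp drop in similarity suggests a cut between frames.
            scenes.append((start, i - 1))
            start = i
    scenes.append((start, len(frame_embeddings) - 1))
    return scenes


# Toy per-frame embeddings: two frames of one scene, then three of another.
frames = [
    [1.0, 0.0, 0.1],
    [0.98, 0.05, 0.1],
    [0.1, 1.0, 0.0],
    [0.12, 0.97, 0.05],
    [0.1, 0.95, 0.0],
]

scenes = split_into_scenes(frames)
print(scenes)
```

Each scene could then be indexed by one representative frame embedding and searched with text queries.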


Ideas curated by Decebal Dobrica.
