Expensive to train?

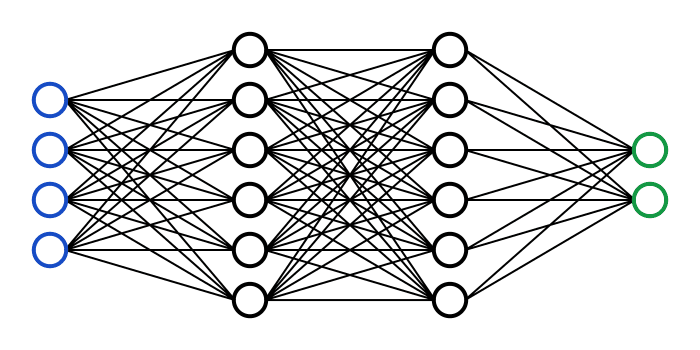

Some neural networks are very expensive to train. This cost popularized an approach known as pre-training: a neural network is first trained on a large general-purpose dataset using significant computational resources, and is then fine-tuned for the task at hand using far less data and compute.
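As a rough illustration of the pre-train then fine-tune idea, here is a toy sketch in plain Python. It is not how real networks are trained: a three-weight linear model stands in for a neural network, and the datasets, weights, step counts, and function names (`train`, `mse`, `make_data`) are all made-up assumptions chosen only to show the workflow.

```python
import random

def predict(w, x):
    """Linear model: a stand-in for a neural network in this toy sketch."""
    return sum(wi * xi for wi, xi in zip(w, x))

def train(weights, data, steps, lr):
    """Gradient descent on mean squared error, starting from `weights`."""
    w = list(weights)
    n = len(data)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, y in data:
            err = predict(w, x) - y
            for i, xi in enumerate(x):
                grad[i] += 2 * err * xi / n
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w

def mse(w, data):
    """Mean squared error of the model on a dataset."""
    return sum((predict(w, x) - y) ** 2 for x, y in data) / len(data)

def make_data(true_w, n, rng):
    """Synthetic (x, y) pairs generated from hypothetical 'true' weights."""
    data = []
    for _ in range(n):
        x = [rng.uniform(-1, 1) for _ in true_w]
        data.append((x, predict(true_w, x)))
    return data

rng = random.Random(0)
general_w = [2.0, -1.0, 0.5]  # assumed general-purpose task
task_w = [2.1, -0.9, 0.6]     # assumed related downstream task

big_general = make_data(general_w, 2000, rng)  # large dataset
small_task = make_data(task_w, 20, rng)        # small task dataset
task_test = make_data(task_w, 200, rng)        # held-out task data

# Expensive phase: many steps over a lot of data.
pretrained = train([0.0, 0.0, 0.0], big_general, steps=200, lr=0.1)

# Cheap phase: a few steps over little data, starting from pretrained weights.
fine_tuned = train(pretrained, small_task, steps=10, lr=0.1)

# Baseline: the same cheap budget, but starting from zero weights.
from_scratch = train([0.0, 0.0, 0.0], small_task, steps=10, lr=0.1)

loss_fine_tuned = mse(fine_tuned, task_test)
loss_from_scratch = mse(from_scratch, task_test)
print("fine-tuned test loss:  ", loss_fine_tuned)
print("from-scratch test loss:", loss_from_scratch)
```

With the same small budget of data and steps, the model that starts from pretrained weights ends up with a lower test loss than the one trained from scratch, because the general task has already moved its weights close to what the downstream task needs.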
