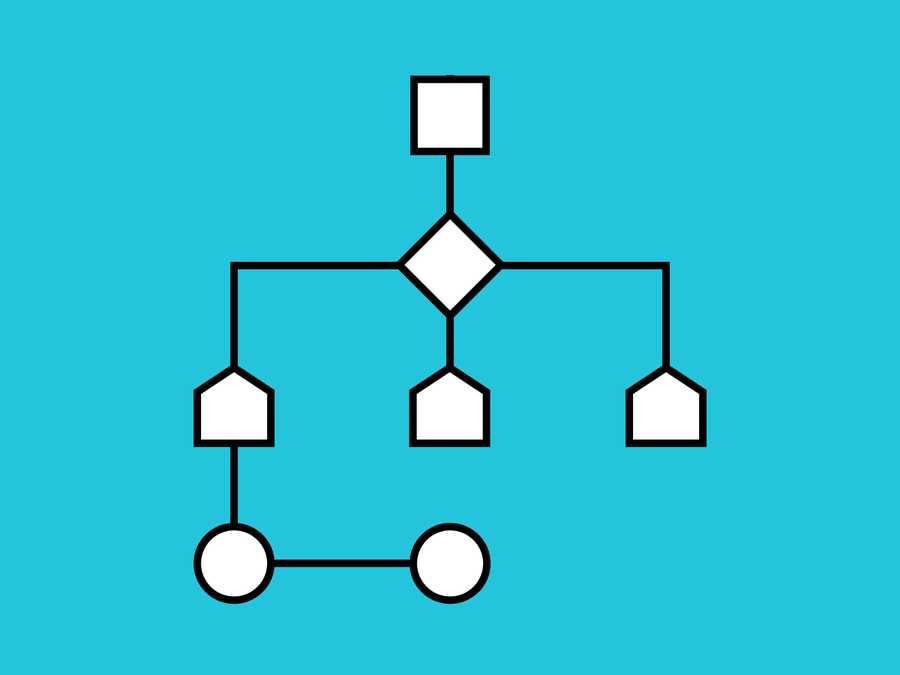

An algorithm is a set of instructions

The instructions tell a computer how to transform a set of facts into useful information.

The facts are data. The useful information is knowledge for people, instructions for machines or input for another algorithm. Typical examples are sorting sets of numbers or finding routes through maps.

123

991 reads

CURATED FROM

IDEAS CURATED BY

The idea is part of this collection:

Learn more about problemsolving with this collection

Understanding the importance of decision-making

Identifying biases that affect decision-making

Analyzing the potential outcomes of a decision

Related collections

Read & Learn

20x Faster

without

deepstash

with

deepstash

with

deepstash

Personalized microlearning

—

100+ Learning Journeys

—

Access to 200,000+ ideas

—

Access to the mobile app

—

Unlimited idea saving

—

—

Unlimited history

—

—

Unlimited listening to ideas

—

—

Downloading & offline access

—

—

Supercharge your mind with one idea per day

Enter your email and spend 1 minute every day to learn something new.

I agree to receive email updates