Similar ideas to Reading pdf

Make Big Goals Easy To Achieve

Write down your big goal, e.g. "I want to be fit enough to run 10 km."

Break this down into smaller, self-contained goals, such as:

- Nutrition

- Equipment

- Building stamina

Break each of those goals into simple daily tasks to complete. For building stamina th...

Agile Estimation and Planning

1. Planning Poker: All participants estimate the items using numbered playing cards. Voting is anonymous, and large differences between votes trigger a discussion. Voting is repeated until the whole team reaches consensus on the estimate. Planning poker works well when you hav...
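The voting loop described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the card values in `DECK`, the "more than one card apart" rule for what counts as a large difference, and the function names are all hypothetical, since the source only mentions numbered cards, anonymous voting, discussion, and repeated rounds.

```python
from typing import Dict, List, Optional, Tuple

# Assumed deck: the source only says "numbered playing cards".
DECK = [1, 2, 3, 5, 8, 13, 21]


def has_large_spread(votes: Dict[str, int], max_steps: int = 1) -> bool:
    """True when the highest and lowest cards are more than max_steps apart in the deck."""
    positions = [DECK.index(card) for card in votes.values()]
    return max(positions) - min(positions) > max_steps


def consensus(votes: Dict[str, int]) -> Optional[int]:
    """Return the agreed estimate if every participant played the same card."""
    distinct = set(votes.values())
    return distinct.pop() if len(distinct) == 1 else None


def run_session(rounds: List[Dict[str, int]]) -> Tuple[Optional[int], int]:
    """Replay voting rounds in order; stop at the first round with full agreement.

    Returns the agreed estimate (or None) and how many rounds had a spread
    large enough to call for a discussion.
    """
    discussions = 0
    for votes in rounds:
        agreed = consensus(votes)
        if agreed is not None:
            return agreed, discussions
        if has_large_spread(votes):
            discussions += 1  # outliers would explain their reasoning here
    return None, discussions


if __name__ == "__main__":
    rounds = [
        {"ana": 3, "ben": 13, "chi": 5},  # wide spread, so the team discusses
        {"ana": 5, "ben": 5, "chi": 5},   # everyone agrees after the discussion
    ]
    print(run_session(rounds))
```

In a real session the votes would be revealed simultaneously rather than read from a list; the list of rounds here simply stands in for successive re-votes.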

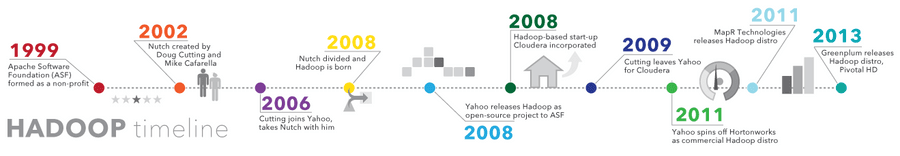

Big data Hadoop

- Ability to store and process huge amounts of any kind of data, quickly. With data volumes and varieties constantly increasing, especially from social media and the Internet of Things (IoT), th...
