AI: Scaling Solutions Vs Risks

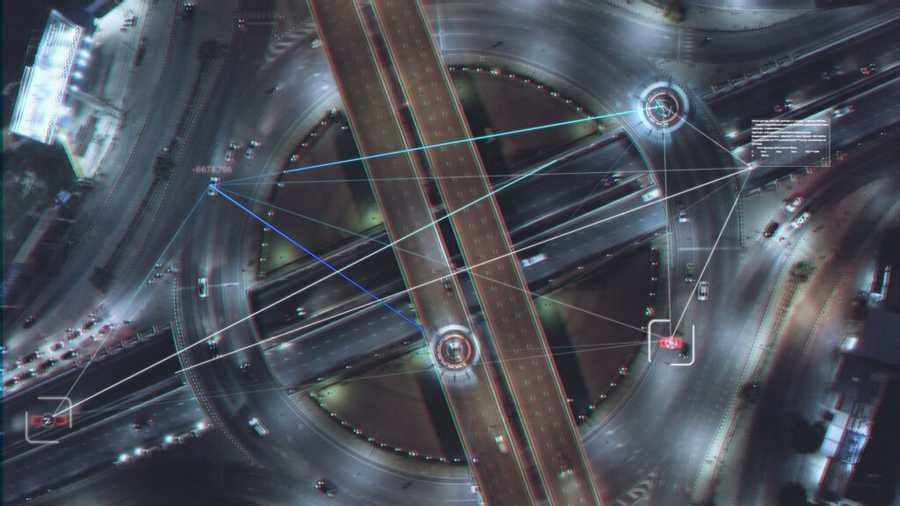

Companies are leveraging data and artificial intelligence to create scalable solutions — but they’re also scaling their reputational, regulatory, and legal risks.

- Los Angeles is suing IBM for allegedly misappropriating data it collected with its ubiquitous weather app.

- Optum is being investigated by regulators for creating an algorithm that allegedly recommended that doctors and nurses pay more attention to white patients than to sicker black patients.

- Goldman Sachs is being investigated by regulators for using an AI algorithm that allegedly discriminated against women by granting men larger credit limits than women on the Apple Card.

- Facebook infamously granted Cambridge Analytica, a political firm, access to the personal data of more than 50 million users.



