Standard Approaches To Data And AI Ethical Risk Mitigation

- The academic approach: this approach focuses on spotting ethical problems, tracing their sources, and working out how to think through them. Unfortunately, it tends to ask different questions than businesses do. The result is academic treatments that do not speak to the highly particular, concrete uses of data and AI.

- The “on-the-ground” approach: the people building the products know to ask the business-relevant, risk-related questions precisely because they are the ones making the products. But they lack the skill, knowledge, and experience to answer ethical questions systematically, exhaustively, and efficiently, and they lack institutional support.

- The high-level AI ethics principles: Google and Microsoft, for instance, trumpeted their principles years ago. The difficulty comes in operationalizing those principles. What, exactly, does it mean to be for “fairness”? Which metric is the right one in any given case, and who makes that judgment?
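
To make the "which metric?" question concrete, here is a minimal Python sketch. Everything in it is an illustrative assumption rather than something from the source: the toy approval data, the group labels `A` and `B`, and the choice of two commonly discussed metrics (demographic parity and equal opportunity). It shows one way the same set of decisions can look fair under one metric and unfair under another.

```python
from dataclasses import dataclass


@dataclass
class Record:
    group: str       # hypothetical group label, "A" or "B"
    qualified: bool  # ground truth: should this person have been approved?
    approved: bool   # the model's decision


def make(group, qualified, approved, n):
    """Build n identical toy records."""
    return [Record(group, qualified, approved) for _ in range(n)]


def approval_rate(records, group):
    """Share of a group that was approved (used for demographic parity)."""
    members = [r for r in records if r.group == group]
    return sum(r.approved for r in members) / len(members)


def true_positive_rate(records, group):
    """Share of qualified group members approved (used for equal opportunity)."""
    qualified = [r for r in records if r.group == group and r.qualified]
    return sum(r.approved for r in qualified) / len(qualified)


# Invented toy data: 10 people per group, 4 approvals per group.
records = (
    make("A", True, True, 4) + make("A", True, False, 2) + make("A", False, False, 4)
    + make("B", True, True, 2) + make("B", False, True, 2) + make("B", False, False, 6)
)

# Demographic parity gap: difference in overall approval rates.
parity_gap = abs(approval_rate(records, "A") - approval_rate(records, "B"))

# Equal opportunity gap: difference in approval rates among the qualified.
opportunity_gap = abs(
    true_positive_rate(records, "A") - true_positive_rate(records, "B")
)

print(f"demographic parity gap: {parity_gap:.2f}")      # 0.00
print(f"equal opportunity gap:  {opportunity_gap:.2f}")  # 0.33
```

In this toy data both groups are approved at the same rate, so the demographic parity gap is zero, yet qualified members of group A are approved less often than qualified members of group B. A principle that only says "be fair" does not say which of these gaps matters, which is the operationalization problem the bullet above describes.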

Similar ideas to Standard Approaches To Data And AI Ethical Risk Mitigation

How to Operationalize Data and AI Ethics

- Identify existing infrastructure that a data and AI ethics program can leverage.

- Create a data and AI ethical risk framework that is tailored to your industry.

- Change how you think about ethics by taking cues from the successes in health care. Leaders should take inspiration...

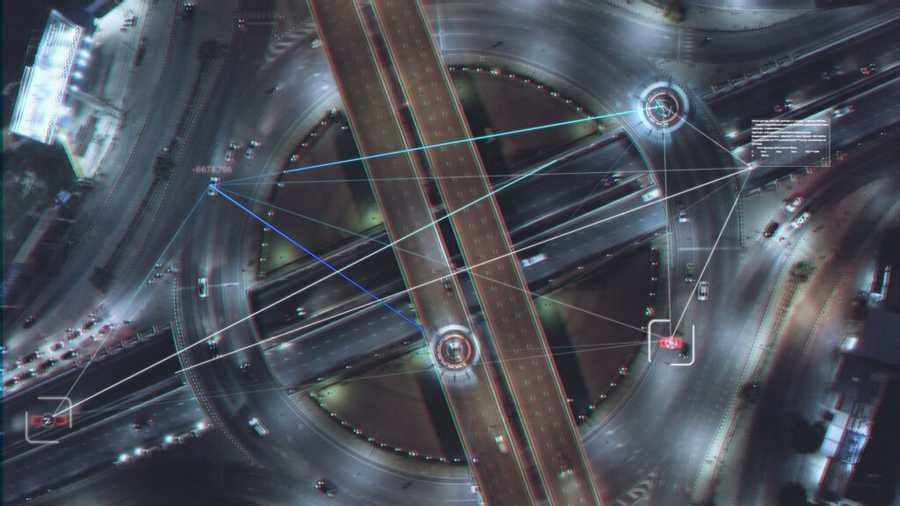

Building ethical AI

Companies are leveraging data and artificial intelligence to create scalable solutions — but they’re also scaling their reputational, regulatory, and legal risks. For instance, Los Angeles...

How to Solve an Ethical Dilemma?

The biggest challenge of an ethical dilemma is that it does not offer an obvious solution that would comply with ethics al norms.

The following approaches to solve an ethical dilemma were deduced:

- Refute the paradox (dilemma): The situation must be carefully ana...
