Is Google’s AI research about to implode?

Curated from: soccermatics.medium.com


The limits of AlphaGo or GPT-3

Although AI researchers could train systems to win at Space Invaders, those systems couldn't play games like Montezuma's Revenge, where rewards can only be collected after completing a series of actions (for example: climb down a ladder, climb down a rope, climb down another ladder, jump over a skull and climb up a third ladder).

The algorithms can't learn these types of games because they require an understanding of concepts such as ladders, ropes and keys. We humans have these concepts built into our cognitive model of the world, but they can't be learnt through DeepMind's reinforcement learning approach.


Modelling & AI

Mathematical modelling consists of 3 components:

- Assumptions: Drawn from our experience and intuition, these form the basis of our thinking about a problem.

- Model: This is the representation of our assumptions in a form we can reason with (e.g. an equation or a simulation).

- Data: This is what we measure and understand about the real world.

Current AI is strong on the model (component 2): the neural network model of pictures & words. But this is just one model among many, possibly infinitely many, alternatives. It is one way of looking at the world.

In emphasising the model, researchers make a strong implicit assumption: that their model doesn't need assumptions. But all models do.

20

182 reads

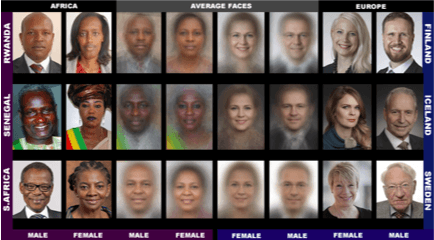

Neutrality in AI

True neutrality in language and image data is impossible.

If our text and image libraries are shaped by, and document, sexism, systemic racism and violence, how can we expect to find neutrality in this data? We can't.

If we use models that learn from Reddit without any assumptions of our own built in, then our assumptions will come from Reddit.


IDEAS CURATED BY

Vladimir Oane: Life-long learner. Passionate about leadership, entrepreneurship, philosophy, Buddhism & SF. Founder @deepstash.
