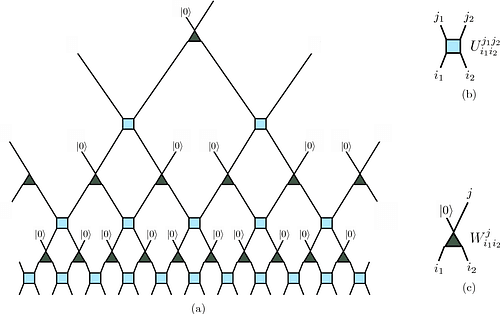

And it has the familiar parts of your classical CNN:

1. Convolutional layers: Instead of kernels, you have gates that are applied to adjacent qubits

2. Pooling layers: Where you measure half of the qubits and discard them, so only the other half moves on to the next layer

3. Fully connected layer: Just like the normal one
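To make the first two parts concrete, here is a rough NumPy sketch of a convolution-then-pooling loop on a tiny simulated state. It is only an illustration under some assumptions that are not from the original: the gate is a random (untrained) unitary, the qubit count is a power of two, pooling simply measures every second qubit and drops it, and all the function names (`conv_layer`, `pool_layer`, `run_qcnn`, and so on) are made up for this example.

```python
import numpy as np

def random_unitary(dim, rng):
    """Random unitary taken from the QR decomposition of a complex Gaussian matrix."""
    m = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
    q, _ = np.linalg.qr(m)
    return q

def apply_two_qubit_gate(state, gate, q0, q1):
    """Apply a 4x4 gate to qubits q0 and q1 of a state stored as a (2,)*n tensor."""
    g = gate.reshape(2, 2, 2, 2)  # axes: (out0, out1, in0, in1)
    state = np.tensordot(g, state, axes=([2, 3], [q0, q1]))
    # tensordot puts the two output axes first; move them back into place
    return np.moveaxis(state, [0, 1], [q0, q1])

def measure_and_discard(state, q, rng):
    """Sample a measurement of qubit q, project onto the outcome, drop the qubit."""
    amps = np.moveaxis(state, q, 0).reshape(2, -1)
    probs = np.sum(np.abs(amps) ** 2, axis=1)
    outcome = rng.choice(2, p=probs / probs.sum())
    reduced = np.take(state, outcome, axis=q)
    return reduced / np.linalg.norm(reduced)

def conv_layer(state, gate):
    """Apply the same gate to every pair of adjacent qubits (even pairs, then odd pairs)."""
    for start in (0, 1):
        for q in range(start, state.ndim - 1, 2):
            state = apply_two_qubit_gate(state, gate, q, q + 1)
    return state

def pool_layer(state, rng):
    """Measure and drop every second qubit, highest index first so indices stay valid."""
    for q in reversed(range(1, state.ndim, 2)):
        state = measure_and_discard(state, q, rng)
    return state

def run_qcnn(n_qubits, seed=0):
    """Alternate conv and pool layers until one qubit is left; return the qubit counts."""
    rng = np.random.default_rng(seed)
    state = np.zeros((2,) * n_qubits, dtype=complex)
    state[(0,) * n_qubits] = 1.0  # start in |00...0>
    counts = []
    while state.ndim > 1:
        state = conv_layer(state, random_unitary(4, rng))  # untrained placeholder gate
        state = pool_layer(state, rng)
        counts.append(state.ndim)
    return counts, state

counts, final_state = run_qcnn(8)
print("qubits left after each pooling layer:", counts)
```

Each pass through the loop halves the number of qubits, which is the shrinking shape you see in the diagram. In a real QCNN the gates would be trainable parameters rather than random unitaries, and the last remaining qubits would go through the fully connected part before the final measurement.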

If you’re a super quantum nerd, you may have noticed that this architecture resembles a reverse MERA (Multi-scale Entanglement Renormalization Ansatz).

A normal MERA takes 1 qubit and then exponentially increases the number of qubits by introducing new qubits into the circuit.

But in the reverse MERA, we’re doing the
