4 Cognitive Biases In AI/ML Systems

Curated from: pub.towardsai.net

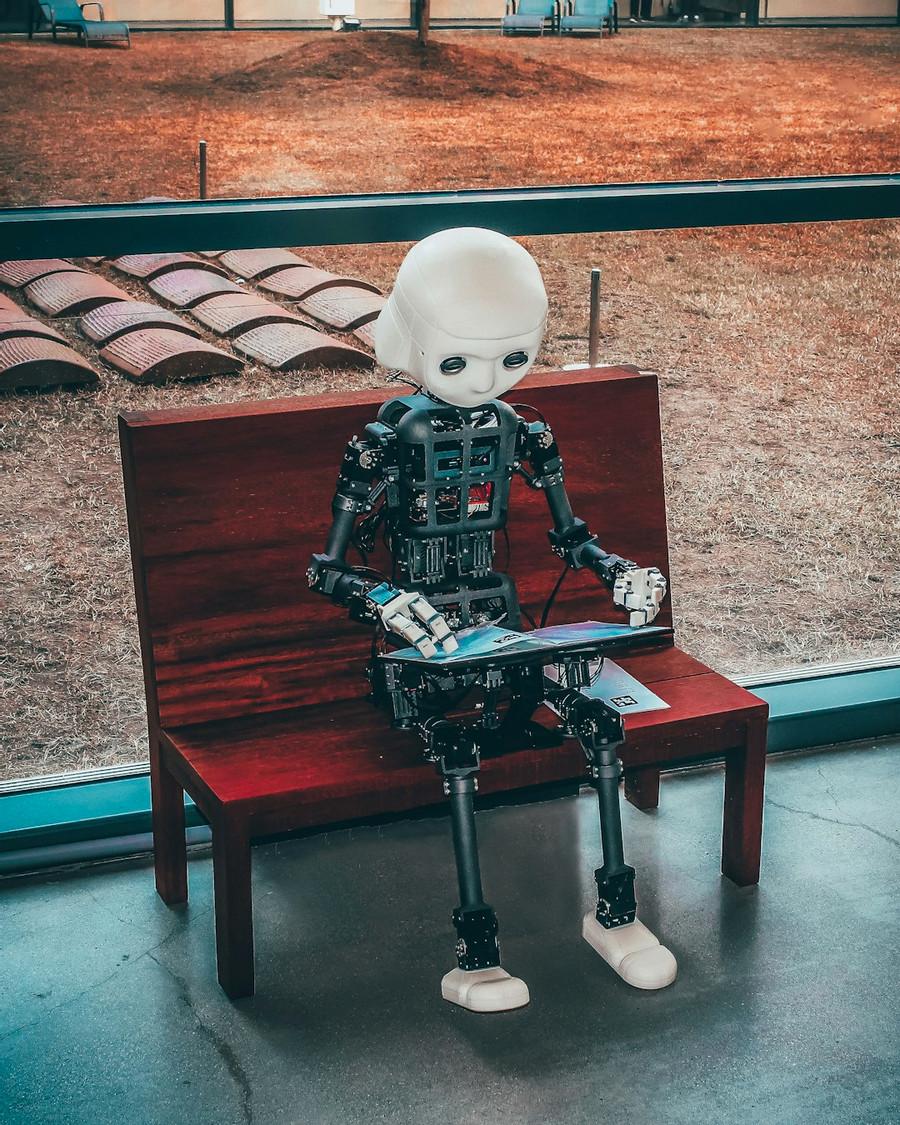

With the rapid proliferation of advanced technology, more and more systems are now equipped with artificial intelligence and machine learning algorithms.

However, can we objectively say that these systems are truly fair and unbiased?

In this stash, I share 4 cognitive biases that show up in AI/ML systems and explain how machines can inherit bias from the engineers who build them, who are inherently imperfect.

Selection Bias

- Selection bias refers to the selection of training/testing data that is not representative of the entire population.

- Example: An engineer uses the first 100 volunteers who responded to an email as training data.

- The problem: The first 100 respondents may be more enthusiastic about a product or study than the last 100 respondents. By deliberately taking only the earliest respondents, the engineer skews the sample toward the most eager users.

- The solution: Select a random sample of 100 users from your pool of email respondents instead of the first 100.
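As a minimal sketch of the difference between the two approaches, the snippet below contrasts taking the first 100 respondents with drawing 100 at random. The respondent pool, the function names, and the seed are hypothetical and only serve the illustration.

```python
import random


def first_n(respondents, n):
    """Biased selection: keeps only the earliest respondents."""
    return respondents[:n]


def random_n(respondents, n, seed=None):
    """Uniform selection: every respondent is equally likely to be picked."""
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible
    return rng.sample(respondents, n)  # sampling without replacement


# Hypothetical pool: 1,000 respondent IDs, ordered by time of reply.
respondents = list(range(1000))

early = first_n(respondents, 100)
sample = random_n(respondents, 100, seed=42)

print("largest ID among the first 100:", max(early))
print("largest ID in the random sample:", max(sample))
```

The random sample draws from the whole pool, so late respondents are represented alongside early ones.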

Reporting Bias (1)

- Reporting bias refers to people’s (conscious and unconscious) tendency to report information selectively, so that some experiences are underreported.

- Example: Many Amazon products have more 5-star and 1-star reviews than 2-, 3-, or 4-star reviews because people who have extreme experiences (either positive or negative) are more likely to post a review than those who have neutral experiences.

Reporting Bias (2)

- The problem: An engineer who uses online reviews as the primary data source may create an AI model that is great at detecting extreme sentiments but much weaker at detecting neutral, subtle sentiments.

- The solution: Consider broadening the data collection scope to account for the underrepresented data.
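Before broadening the collection, it helps to know which ratings are actually thin in the data. The sketch below computes the share of each star rating and flags those under a cutoff. The review counts and the 10% threshold are made-up assumptions for illustration, not figures from the article.

```python
from collections import Counter


def rating_shares(ratings):
    """Return the fraction of reviews at each star level from 1 to 5."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    counts = Counter(ratings)
    total = len(ratings)
    return {star: counts.get(star, 0) / total for star in range(1, 6)}


def underrepresented(shares, threshold=0.10):
    """List the star levels whose share falls below the threshold."""
    return [star for star, share in shares.items() if share < threshold]


# Hypothetical review data, skewed toward the extremes.
ratings = [5] * 45 + [1] * 35 + [4] * 8 + [3] * 7 + [2] * 5

shares = rating_shares(ratings)
print(underrepresented(shares))  # [2, 3, 4]
```

Ratings flagged this way are candidates for extra data collection, for example by surveying customers who did not leave a review.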

Implicit Bias

- Implicit bias refers to people’s unconscious tendency to make assumptions about others or to associate them with stereotypes.

- Example: An engineer who is often bitten by dogs believes that dogs are more aggressive than cats, even though that may not be scientifically true.

- The problem: The engineer may treat “dogs = aggressive” as the ground truth and thus tune her AI model to label dogs as more aggressive than cats.

- The solution: Because implicit bias is unconscious to the individual, having multiple engineers build the AI and establishing proper peer review procedures would reduce such biases.
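One way to make peer review concrete is to have two engineers label the same examples independently and measure how much they agree beyond chance. The sketch below uses Cohen's kappa for that purpose. The statistic is not mentioned in the original article, and the labels are invented for illustration.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators (1.0 = perfect)."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and of equal length")
    n = len(labels_a)
    # Fraction of items on which both annotators gave the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected if each annotator labeled at random
    # according to their own label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:  # both annotators used a single identical label
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical labels from two engineers for the same six dog photos.
engineer_a = ["aggressive", "aggressive", "calm", "aggressive", "calm", "aggressive"]
engineer_b = ["calm", "aggressive", "calm", "calm", "calm", "aggressive"]

kappa = cohens_kappa(engineer_a, engineer_b)
print(round(kappa, 2))  # 0.4
```

A low value suggests the labels depend on who assigned them, which is a cue to review the labeling guidelines before training on the data.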

Framing Bias

- Framing bias refers to people’s tendency to be influenced by how information is presented.

- Example: An engineer who sees a dark and dull website for a product believes that the product must have poor sales, ignoring the product’s actual strong sales figures.

- The problem: In designing the AI algorithm, the engineer may take into account subjective variables, such as the color of a website, instead of focusing on objective metrics.

- The solution: Avoid subjective (usually qualitative) data and prioritize objective, factual data instead.

Quick Recap Of 4 Cognitive Biases In AI/ML Systems

- Selection Bias (selecting data not representative of the entire population)

- Reporting Bias (people’s tendency to underreport information)

- Implicit Bias (people’s unconscious tendency to make assumptions)

- Framing Bias (people’s tendency to be affected by how information is presented)

The 5 best practices to enhance objectivity in AI/ML systems

- Always select a random sample (instead of the first or last hundred data points)

- Verify your data sources through comparison with other data sources

- Assign more (diverse) engineers to develop the AI/ML system

- Establish proper peer review procedures to cross-examine logic and unconscious bias

- Prioritize objective and factual data over subjective (usually qualitative) data
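The second practice, comparing one data source against another, can be sketched as a simple distribution check. The example below measures how far apart the label proportions of two sources are using total variation distance (0 means identical, 1 means no overlap). The metric is one possible choice rather than the article's method, and both datasets are hypothetical.

```python
from collections import Counter


def label_shares(labels):
    """Return the proportion of each label in a dataset."""
    if not labels:
        raise ValueError("labels must not be empty")
    total = len(labels)
    return {label: count / total for label, count in Counter(labels).items()}


def total_variation(labels_a, labels_b):
    """Half the sum of absolute differences between label proportions."""
    shares_a = label_shares(labels_a)
    shares_b = label_shares(labels_b)
    all_labels = set(shares_a) | set(shares_b)
    return 0.5 * sum(
        abs(shares_a.get(label, 0.0) - shares_b.get(label, 0.0))
        for label in all_labels
    )


# Hypothetical sentiment labels from two sources for the same product.
online_reviews = ["pos"] * 50 + ["neg"] * 40 + ["neutral"] * 10
customer_survey = ["pos"] * 30 + ["neg"] * 20 + ["neutral"] * 50

gap = total_variation(online_reviews, customer_survey)
print(round(gap, 2))  # 0.4
```

A large gap does not say which source is right, only that at least one of them is not representative and deserves a closer look.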

Conclusion

As you can see, human perception is deeply flawed, and our imperfections may trickle down into the systems we build. By acknowledging such biases, however, we can improve the AI systems we build and make them fairer and more objective.

I hope you learned something new today. If you like what you’re reading, do drop a clap or a follow!

I’ll catch you in the next article. Cheers!

IDEAS CURATED BY

Lye Jia Jun

Learner. Writer. Leader. I'm a tech enthusiast and love all-things productivity. 🚀🚀

CURATOR'S NOTE

In an ideal world, machines are unbiased and objective. However, the humans who engineer these machines are inherently biased.
